AMD Radeon R9 Fury X: Reaching out for Maxwell

At E3 this year, AMD unveiled the Radeon R9 Fury X as its answer to NVIDIA's absolute dominance of the enthusiast graphics card market over the past half year. Of course, it's never good for consumers when only one company is dominating the scene, and enthusiasts and gamers the world over have been hotly anticipating the release of AMD's new flagship, which will, or at least will try to, put NVIDIA on the back foot.

The Radeon R9 Fury X is a one-of-a-kind card, not least because of its use of a closed-loop water cooling solution and High Bandwidth Memory (HBM). And as if to further highlight the strength of its opening gambit of 2015, AMD is drawing on its past successes, or more specifically ATI's, for its new flagship's naming scheme.

Back in 1995, ATI named its first 3D accelerators Rage. The name went on to appear on more cards, including the Rage Pro, Rage 128 Pro, and Rage Fury. That's right, Fury. With the Radeon R9 Fury X, AMD is reviving an old name and also drawing on the same semantics that inspired the Rage name. Just as the Rage cards were ATI's first to feature 3D acceleration, AMD may be hoping that the Fury X will mark the company's emergence from the difficulties that have weighed it down in recent years.

Welcome to Fiji

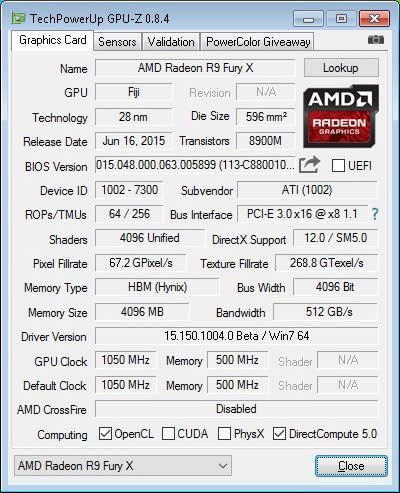

AMD has reserved its new Fiji GPU for the R9 Fury X. Last year's single-GPU flagship was the AMD Radeon R9 290X, and this year's R9 390X is merely a rebrand of that card's Hawaii XT GPU (now called Grenada XT).

AMD has also managed to cram 8.9 billion transistors onto Fiji's 596mm² die. In comparison, NVIDIA's GM200 GPU on the GeForce GTX Titan X and 980 Ti measures 601mm² and packs 8 billion transistors, which gives Fiji the higher transistor density of the two. Of course, the two are entirely different chips built on different architectures, so density isn't really a good gauge of performance.
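For readers who want to check the density comparison, here is a minimal Python sketch that divides each chip's quoted transistor count by its die area. It assumes a simple chip-wide average, which glosses over the fact that different parts of a chip pack transistors at different densities.

```python
# Transistor counts and die areas as quoted in this review.
CHIPS = {
    "Fiji": {"transistors": 8.9e9, "die_mm2": 596},
    "GM200": {"transistors": 8.0e9, "die_mm2": 601},
}


def density_m_per_mm2(transistors: float, die_mm2: float) -> float:
    """Average transistor density in millions of transistors per mm²."""
    return transistors / die_mm2 / 1e6


densities = {name: density_m_per_mm2(**spec) for name, spec in CHIPS.items()}
for name, value in densities.items():
    print(f"{name}: {value:.1f}M transistors/mm²")

# Relative difference in average density, Fiji versus GM200.
ratio = densities["Fiji"] / densities["GM200"]
print(f"Fiji is about {ratio - 1:.0%} denser on average")
```

By this crude measure, Fiji packs roughly 14.9 million transistors per mm² against GM200's 13.3 million, or about 12% more.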

Here’s a look at Fiji’s block diagram before we venture into how Fiji differs from Hawaii:

Like Hawaii, Fiji is still manufactured on a 28nm process node and features four Shader Engines. However, it has bumped up the number of Compute Units (CU) in each Shader Engine from 11 to 16. Each CU has 64 shaders, so that brings the total number of shaders across the four Shader Engines to 4,096, up from 2,816 in Hawaii - an increase of 45%. There are also four texture filter units per CU as on Hawaii, but because there are now 64 CUs (up from Hawaii’s 44), Fiji can boast a total of 256 texture filter units, up from Hawaii's 176 - again, a substantial increase of 45%.
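The unit counts follow from simple multiplication. This Python sketch assumes, as described above, that both chips have four Shader Engines and that every CU carries 64 shaders and four texture filter units; `gpu_totals` is a hypothetical helper for illustration only.

```python
# Per-CU resources and engine count as described above.
SHADER_ENGINES = 4
SHADERS_PER_CU = 64
TEXTURE_UNITS_PER_CU = 4


def gpu_totals(cus_per_engine: int) -> tuple[int, int, int]:
    """Return (compute units, shaders, texture filter units) for a four-engine chip."""
    cus = SHADER_ENGINES * cus_per_engine
    return cus, cus * SHADERS_PER_CU, cus * TEXTURE_UNITS_PER_CU


hawaii = gpu_totals(11)  # (44, 2816, 176)
fiji = gpu_totals(16)    # (64, 4096, 256)
increase = fiji[1] / hawaii[1] - 1
print(f"Shader increase: {increase:.1%}")
```

Because every per-CU resource is identical on both chips, each total scales by the same 64/44 ratio, which is why shaders and texture filter units both rise by roughly 45% (45.5% before rounding).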

Fiji also retains the improvements AMD made to its third-generation Graphics Core Next (GCN) architecture in the Tonga GPU we saw on the Radeon R9 285 (rebranded as the Radeon R9 380). These mainly concern tessellation and geometry performance.

Ultimately, Fiji should deliver improved tessellation, shader, compute, and texture filtering performance over Hawaii.

The Radeon R9 Fury X's Fiji GPU has a core clock of 1050MHz and a memory clock of 500MHz (an effective 1Gbps per pin, since HBM transfers data twice per clock). If this seems a little low to you, you're right. But this is all a result of AMD's use of HBM, which we'll cover in the next section.

Furthermore, the low memory clock speed of 500MHz is compensated for by the super-wide 4,096-bit memory bus, resulting in a total memory bandwidth of 512GB/s. The Fury X is designed expressly to excel at 4K gaming, and this abundance of memory bandwidth is a step in this direction.
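The 512GB/s figure follows from the bus width and the per-pin data rate. Assuming HBM's double data rate (two transfers per clock, so 500MHz gives 1Gbps per pin) and decimal gigabytes, as bandwidth figures are conventionally quoted, a minimal sketch looks like this:

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: bus width x per-pin rate / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


memory_clock_mhz = 500
gbps_per_pin = memory_clock_mhz * 2 / 1000   # double data rate: 1.0Gbps per pin
fury_x_bandwidth = peak_bandwidth_gb_s(4096, gbps_per_pin)
print(f"Fury X peak bandwidth: {fury_x_bandwidth:.0f}GB/s")  # 512GB/s
```

This is a theoretical ceiling; sustained throughput in real games will be lower.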

The Radeon R9 Fury X will also support DirectX 12 and new features like Frame Rate Target Control (FRTC), Virtual Super Resolution (VSR), and Asynchronous Shaders. We've already covered these features in greater depth in our review of the Radeon R7 370 and R9 380, so we won't be rehashing them here.

High Bandwidth Memory

The Fiji GPU die is also now surrounded by four stacks of HBM memory amounting to 4GB. The Fury X is the first consumer GPU to feature HBM, which AMD says will help overcome the limitations of GDDR5 memory.

We’ve covered the key aspects of HBM in a previous article already, but here’s a quick recap. As 4K gaming and other bandwidth-hungry applications begin to impose themselves on the mainstream, GDDR5 is fast exhausting its ability to meet bandwidth demands as the required increase in power consumption is becoming unfeasible.

Each GDDR5 chip has a 32-bit interface, whereas each HBM stack has a 1024-bit interface, which allows HBM to run at low clock speeds while still delivering a huge increase in bandwidth per watt.
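To see why interface width matters, the sketch below compares the peak bandwidth of a single GDDR5 chip with that of a single HBM stack. The 7Gbps GDDR5 data rate is our assumption for a fast GDDR5 chip of this era; it is not stated in this review. Power is not modeled, so this illustrates raw bandwidth per device rather than bandwidth per watt.

```python
def device_bandwidth_gb_s(interface_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth of one memory device (chip or stack) in GB/s."""
    return interface_bits * gbps_per_pin / 8


# Assumption: 7Gbps per pin for GDDR5 (not given in the review).
gddr5_chip = device_bandwidth_gb_s(32, 7.0)    # 28GB/s
hbm_stack = device_bandwidth_gb_s(1024, 1.0)   # 128GB/s per stack
print(f"GDDR5 chip: {gddr5_chip:.0f}GB/s, HBM stack: {hbm_stack:.0f}GB/s")
print(f"Four HBM stacks: {4 * hbm_stack:.0f}GB/s")
```

Even with each pin running at a seventh of the assumed GDDR5 rate, the 32-fold wider interface gives one HBM stack more than four times the bandwidth of one GDDR5 chip, and four stacks add up to the Fury X's 512GB/s.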

Another crucial benefit of HBM is its close proximity to the GPU die itself. Both the HBM stacks and the GPU die sit atop a silicon interposer, and the DRAM dies within each stack are linked vertically by a relatively new type of interconnect called through-silicon vias (TSVs). The interposer is essentially a silicon substrate for the GPU and memory dies, through which run the microscopic wires connecting memory and GPU. The improved proximity simplifies communication and clocking, and also makes the extra-wide memory bus possible.

Furthermore, HBM enables significant space savings over GDDR5 because of its stacked architecture, which is how AMD has managed to build a card with such a compact PCB. 1GB of GDDR5 memory would occupy around 24 x 28mm, but a 1GB HBM stack measures just 5 x 7mm, which translates into a reduction in area of almost 95%.
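The area figures above are easy to check. This sketch simply multiplies out the quoted footprints for 1GB of each memory type.

```python
def area_mm2(width_mm: float, height_mm: float) -> float:
    """Footprint of a rectangular package in mm²."""
    return width_mm * height_mm


gddr5_1gb = area_mm2(24, 28)   # 672mm²
hbm_1gb = area_mm2(5, 7)       # 35mm²
reduction = 1 - hbm_1gb / gddr5_1gb
print(f"{gddr5_1gb:.0f}mm² vs {hbm_1gb:.0f}mm²: {reduction:.1%} smaller")
```

The HBM stack needs about one-nineteenth of the area, a reduction of roughly 94.8%.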

But regardless of the much-vaunted bandwidth and power efficiency improvements enabled by HBM, 4GB may still seem like a paltry amount, especially in an era where high-end GDDR5-based GPUs can have anywhere from 6GB to 12GB of memory. In fact, AMD's own Radeon R9 390X and 390 GPUs are equipped with 8GB of GDDR5 memory.

Nevertheless, AMD apparently does not see its memory capacity as a potential performance limitation. Larger GDDR5 memory capacities have been an inevitable byproduct of the need to increase memory bandwidth by widening the memory bus, which in turn required more GDDR5 chips. AMD says that this has resulted in more capacity than is actually needed, and unused frame buffer capacity serves no functional purpose whatsoever. Now, because of the impressive 512GB/s of bandwidth made available by HBM, AMD no longer needs to increase memory capacity for the sake of getting more bandwidth. Instead, it all comes down to efficiency, and to how effective drivers and programs are at managing the 4GB frame buffer.
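AMD's argument about GDDR5 capacity can be expressed as arithmetic: each GDDR5 chip contributes a 32-bit slice of the bus, so widening the bus means adding chips, and every chip adds capacity whether or not it is needed. The sketch below is illustrative only. It assumes 4Gb (512MB) chips with one chip per 32-bit channel, and `gddr5_layout` is a hypothetical helper; real boards can deviate, for instance by pairing two chips per channel in clamshell mode.

```python
GDDR5_CHANNEL_BITS = 32  # each GDDR5 chip exposes a 32-bit interface


def gddr5_layout(bus_width_bits: int, chip_gb: float = 0.5) -> tuple[int, float]:
    """Return (chip count, total GB), assuming one chip per 32-bit channel.

    chip_gb defaults to 0.5 (a 4Gb chip), which is an assumption for illustration.
    """
    chips = bus_width_bits // GDDR5_CHANNEL_BITS
    return chips, chips * chip_gb


print(gddr5_layout(384))  # (12, 6.0)
print(gddr5_layout(512))  # (16, 8.0)
```

Under those assumptions, a 384-bit bus lands at 6GB and a 512-bit bus at 8GB, matching the 6GB GeForce GTX 980 Ti and the 8GB Radeon R9 390X; with HBM, the bandwidth target no longer dictates chip count in the same way.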

This confidence aside, AMD has still been careful to say that the design of HBM is in no way limited to 4GB. That is, there is no inherent cap on how many HBM stacks a card can have, or on how many DRAM dies can be stacked on top of one another. AMD may have gone with four 1GB stacks (each made up of four 256MB dies) for its debut of the technology, but we could very well see future generations of cards sport larger HBM capacities, either by using larger DRAM dies in each stack or by increasing the number of stacks. After all, the numbers game is never at a standstill.
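Both scaling paths AMD describes, larger dies or more stacks, multiply straight into total capacity. In this sketch, the Fury X configuration comes from the text, while the other two configurations are hypothetical and do not represent announced products.

```python
def hbm_capacity_gb(stacks: int, dies_per_stack: int, die_mb: int) -> float:
    """Total HBM capacity in GB (1GB = 1024MB) for a given stack configuration."""
    return stacks * dies_per_stack * die_mb / 1024


fury_x = hbm_capacity_gb(4, 4, 256)       # the Fury X: 4.0GB
bigger_dies = hbm_capacity_gb(4, 4, 512)  # hypothetical: larger dies per stack
more_stacks = hbm_capacity_gb(8, 4, 256)  # hypothetical: more stacks
print(fury_x, bigger_dies, more_stacks)
```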

A Premium Body

With a launch price of US$649, the Radeon R9 Fury X costs the same as its main competitor, the NVIDIA GeForce GTX 980 Ti. The latter card was launched at the start of June – somewhat pre-emptively – ahead of the Fury X, and ended up delivering performance close to that of the GeForce GTX Titan X.

If we put aside performance numbers for a second, the Radeon R9 Fury X has a clear advantage over the reference GeForce GTX 980 Ti. AMD has built a seriously premium-looking card, complete with a closed-loop liquid cooling solution.

Let's face it. This is one beautiful card, even without going into the details of the materials and finish. The entire card is made up of multiple pieces of die-cast aluminum, with the black aluminum exoskeleton complemented by a nickel-plated chrome finish bearing the Radeon logo. The design is topped off by soft-touch sides that feel better made than most metal-and-plastic cooling shrouds.

The card's bottom surface features an understated dotted grid pattern that we quite like. In fact, the bottom faceplate might just be the most interesting part of the card, because it is held in place by four hex screws that can be removed to swap it out. AMD is letting you 3D print a replacement plate with your own custom design, and the 3D printing model is available for download. Enthusiast PC builders are big on customization, and we think it's great that AMD is making it possible for users to personalize their cards.

AMD has put a lot of effort into designing a high-quality product, and this is evident from the use of a 120mm Nidec Servo Gentle Typhoon fan on the radiator. In our tests, the fan was capable of ramping up to a maximum speed of 3,000rpm. Gentle Typhoons have a reputation for being excellent radiator fans, and we're glad that AMD didn't just slap on a run-of-the-mill chassis fan instead.

As we’ll see later, the water-cooling loop does a great job at keeping the card cool. We also didn’t notice any high-pitched pump whine that some reviewers and users reported, so it may be that we’ve received a unit from one of the later batches.

Display connectors are located round the back of the card, and we’re slightly miffed that AMD has chosen not to include any DVI ports this time. Instead, the card has just one HDMI port and three DisplayPort outputs, so make sure you have the appropriate video cables for your setup.

Power is delivered via two 8-pin PCIe connectors. The Radeon R9 Fury X has a Thermal Design Power (TDP) of 275 watts, slightly higher than the 250 watts of the NVIDIA GeForce GTX 980 Ti and Titan X.
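The two 8-pin connectors give the card more in-spec power than its TDP calls for. The sketch below adds up the nominal ratings; those ratings come from the PCI Express specifications rather than from this review, and TDP is a thermal design target rather than a precise measure of actual board draw, so treat the headroom figure as a rough indication only.

```python
# Nominal power ratings from the PCI Express specifications (not stated in this review).
SLOT_W = 75        # PCIe x16 slot
SIX_PIN_W = 75     # 6-pin auxiliary connector
EIGHT_PIN_W = 150  # 8-pin auxiliary connector


def board_power_limit(eight_pin: int = 0, six_pin: int = 0) -> int:
    """In-spec power available to a card in watts: slot plus auxiliary connectors."""
    return SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


fury_x_limit = board_power_limit(eight_pin=2)  # 375W
fury_x_tdp = 275
print(f"In-spec limit: {fury_x_limit}W, headroom over TDP: {fury_x_limit - fury_x_tdp}W")
```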

The Fury X boasts a row of GPU Tach LEDs (8+1) above the power connectors. They serve as a gauge of the card's activity: only one light is lit when the GPU is idle, but the entire row lights up during a heavy gaming session. There is also a single green LED that glows when the GPU is in ZeroCore Power mode.

The LEDs can also be set to red, blue, or both, or switched off entirely, depending on the positions of the two DIP switches located close by.

Here’s a table showing the positions of the DIP switches and what they do:

| Switch 1 Position | Switch 2 Position | LED Color |
|---|---|---|
| OFF | OFF | GPU Tach Off |
| OFF | ON | Red |
| ON | OFF | Blue |
| ON | ON | Red + Blue |

Test Setup

These are the specifications of our graphics testbed:

Intel Core i7-5960X

ASUS X99-Deluxe (Intel X99 chipset) motherboard

4 x 4GB Corsair Vengeance LPX DDR4-2133 (Auto timings: CAS 15-15-15-36)

Samsung SSD 840 Pro 256GB SATA 6Gbps solid state drive (OS)

Western Digital Caviar Black 1TB SATA 6Gbps hard drive (benchmark + games)

Windows 7 Ultimate 64-bit SP1

Intel INF 10.0.20

AMD hasn't supplied us with its own reference card, so we'll be using a PowerColor-branded Radeon R9 Fury X reference board for our tests. Our PowerColor Radeon R9 Fury X shipped with AMD's 15.15 launch drivers, and those are what we used when testing the card.

Since the Fury X is the pinnacle of AMD's 2015 lineup, it's only right that we compare it against NVIDIA's own top performers, the GeForce GTX 980 Ti and Titan X. We've also thrown the ASUS Strix Radeon R9 390X into the mix to see how the Fury X stacks up against AMD's other products.

Here’s a full list of the cards compared and their driver versions:

PowerColor Radeon R9 Fury X (AMD Catalyst 15.15)

NVIDIA GeForce GTX 980 Ti (ForceWare 353.30)

NVIDIA GeForce GTX Titan X (ForceWare 353.30)

ASUS Strix Radeon R9 390X (AMD Catalyst 15.15)

Benchmarks

Because this is our first review of the Fury X, we’ll be putting it through our full suite of synthetic and real-world benchmarks to gain an idea of its complete performance characteristics.

This is a full list of the benchmarks used:

Futuremark 3DMark 2013

Middle-earth: Shadow of Mordor

Crysis 3

Tomb Raider

Hitman: Absolution

Thief

For our temperature and power consumption tests, we used the Fire Strike Extreme benchmark in 3DMark 2013.

We’ve also compiled a table to show how the cards stack up against one another on paper:


Performance Results

3DMark 2013

The Radeon R9 Fury X came in behind both the NVIDIA GeForce GTX 980 Ti and Titan X in our synthetic 3DMark benchmarks. In the least demanding Fire Strike test, it was just under 9% slower than the GeForce GTX 980 Ti and approximately 12% behind the Titan X. But despite trailing NVIDIA's flagship Maxwell GPUs, the Radeon R9 Fury X managed to beat the Radeon R9 390X by a fairly commanding 20%. While we're not surprised that the US$649 Fury X trails the Titan X, we're a bit disappointed that it didn't come closer to the similarly priced GeForce GTX 980 Ti.
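Percentage gaps in this review are quoted in both directions ("X% slower", "Y% faster"), and the two aren't interchangeable because each uses a different card as its baseline. A small Python sketch, using a normalized index rather than measured scores (GeForce GTX 980 Ti = 100, with the "just under 9%" Fire Strike gap rounded to 9%):

```python
def percent_slower(score: float, reference: float) -> float:
    """Fraction by which `score` falls short of `reference` (baseline: reference)."""
    return 1 - score / reference


def percent_faster(score: float, reference: float) -> float:
    """Fraction by which `score` exceeds `reference` (baseline: reference)."""
    return score / reference - 1


# Normalized index, not measured scores: GTX 980 Ti = 100, Fury X about 9% slower.
gtx_980_ti, fury_x = 100.0, 91.0
print(f"Fury X is {percent_slower(fury_x, gtx_980_ti):.1%} slower")
print(f"GTX 980 Ti is {percent_faster(gtx_980_ti, fury_x):.1%} faster")
```

The same asymmetry applies elsewhere: being almost 25% slower, as in Tomb Raider, means the other card is nearly a third faster, while being up to 25% faster, as the NVIDIA cards were in Crysis 3, means the Fury X was about 20% slower.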

However, the performance gap between the top three cards narrowed in the more graphically taxing Fire Strike Extreme (2560 x 1440) and Fire Strike Ultra (3840 x 2160) tests. This makes sense, as the Fury X is supposedly geared toward performance at higher resolutions, but it still fails to match even the GeForce GTX 980 Ti.

Middle-earth: Shadow of Mordor

The Radeon R9 Fury X had a fairly strong showing in Middle-earth: Shadow of Mordor, a game that can chew up quite a lot of graphics memory. At a resolution of 2560 x 1600 and Ultra settings, the Fury X managed to edge out all the other cards to take first place.

However, the GeForce GTX Titan X once again came out ahead at the higher resolutions, although the Radeon R9 Fury X and GeForce GTX 980 Ti were both within a few frames of it. What’s more, the Radeon R9 390X actually had quite a decent showing against the other top-end cards at the highest resolution of 5120 x 3200, perhaps helped along by its 8GB of GDDR5 memory.

Crysis 3

Even though it was first released in 2013, Crysis 3 continues to punish graphics cards and serves as quite a demanding real-world benchmark. The GeForce GTX 980 Ti and Titan X were neck-and-neck in Crysis 3, while the Radeon R9 Fury X continued to trail behind. Both NVIDIA cards were up to 25% faster than the Fury X at a resolution of 1920 x 1200 with 8x MSAA enabled.

The difference once again narrowed at the higher 2560 x 1600 resolution, just like we observed in 3DMark. The Fury X is proving far more capable at holding its own at higher resolutions, and it probably has to thank the extra memory bandwidth offered by its use of HBM for this.

Tomb Raider

While Tomb Raider is an older DirectX 11 game, the Fury X surprisingly delivered only modest performance, at least in comparison with the NVIDIA cards. Coming in just shy of 100fps at a 1920 x 1200 resolution, the Fury X turned out to be almost 25% slower than the GeForce GTX 980 Ti.

However, unlike in 3DMark and Crysis 3, the Fury X failed to close the gap at the higher resolution and was left to contend with an approximately 25% deficit from both NVIDIA cards. Of course, it was still significantly faster than the Radeon R9 390X, but that is a small consolation given that it continues to trail the GeForce GTX 980 Ti which costs the same.

Hitman: Absolution

Hitman: Absolution can be quite a demanding game to run with anti-aliasing turned on, and all the cards take quite a big performance hit when we turn it up to 8x AA (the highest setting available). With 8x AA enabled, the Radeon R9 Fury X once again narrowed the performance gap with the GeForce GTX Titan X and 980 Ti to within a few frames per second. This was the case at both of our tested resolutions.

In comparison, at a resolution of 1920 x 1200 with anti-aliasing turned off, the Radeon R9 Fury X trailed the GeForce GTX 980 Ti by as much as 15%.

From what we’ve seen so far, it looks like the Fury X thrives when rendering demand is the highest. It fails to demonstrate its full potential in less demanding settings, and this would probably explain its lackluster performance in Tomb Raider, which doesn’t really tax modern, high-end graphics cards in 2015.

Thief

The NVIDIA GeForce GTX 980 Ti and Titan X once again demonstrated how close they are in performance numbers in Thief. In addition, the Radeon R9 390X came uncomfortably close to the Fury X in all our tested settings but the most demanding one.

It took a resolution of 2560 x 1600 and Very High settings to get the Fury X to ramp up and land in the same performance neighborhood as the two NVIDIA cards. This was also the setting that produced the most telling results, as the frame rates in our other three tests were remarkably similar, with averages ranging from the low 70s to the low 80s.

It’s tempting to make a case for the Fury X and say that it is designed to do best in the most taxing scenarios, but the fact of the matter is that it still trails the GeForce GTX 980 Ti.

Temperature and Power Consumption

Operating temperatures are where the Radeon R9 Fury X finally has a chance to shine. With its closed-loop liquid cooling solution and Gentle Typhoon fan, the Fury X essentially leaves the other cards in the dust. Of course, air and liquid cooling are in entirely different leagues, but that shouldn’t detract from how good a reference cooler AMD has designed.

The Fury X posted a peak temperature of just 45°C, whereas the other cards came in at around 85°C. We also noticed that the Fury X cooled down a lot faster than the air-cooled cards after being subjected to heavy rendering loads.

When it came to power consumption, the Fury X was roughly on par with the NVIDIA cards, although it did record a slightly higher idle consumption of 93 watts. The Radeon R9 390X also stood out because of its rather high power consumption of 430 watts. These figures are in line with what we observed when we reviewed the Radeon R9 290X, and the R9 390X is clearly showing that it is indeed a Hawaii GPU at heart.

It looks like the Fury X's Fiji GPU and use of HBM have allowed AMD to catch up with NVIDIA in terms of power efficiency.

Overclocking

We used the MSI Afterburner utility to overclock the cards, while stability testing was done using 3DMark 2013. The Radeon R9 Fury X doesn't give users control over voltage or memory clocks out of the box, so we were unfortunately limited to tweaking the core clock. We were able to achieve an overclock of 1130MHz, up from the stock speed of 1050MHz, which translates to a gain of about 7.6%.
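One way to judge an overclock is to compare the performance gain with the clock gain. This sketch uses the core clocks above and the approximate 5% Fire Strike improvement reported in the next paragraph; the "scaling" ratio is a simple heuristic chosen for illustration, not a standard benchmark metric.

```python
def clock_gain(stock_mhz: float, overclock_mhz: float) -> float:
    """Relative core clock increase."""
    return overclock_mhz / stock_mhz - 1


core_gain = clock_gain(1050, 1130)  # Fury X core clock, stock vs. overclocked
score_gain = 0.05                   # approximate Fire Strike gain reported in this review
scaling = score_gain / core_gain    # 1.0 would mean the score tracked the clock exactly
print(f"Clock +{core_gain:.1%}, score +{score_gain:.0%}, scaling {scaling:.2f}")
```

A ratio of about 0.66 means the Fire Strike score rose by roughly two-thirds as much as the core clock did.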

The boost in clock speeds that we achieved translated into an approximate gain of 5% in 3DMark Fire Strike scores. Performance improvements were far less significant in Fire Strike Extreme and Ultra, and you probably will not observe any tangible gain in games.

However, reports have recently emerged that it's possible to unlock memory overclocking by activating AMD Overdrive in the Catalyst Control Center. Users can then ramp up the memory clock speed, and the change appears to be reflected in GPU-Z. Not to put a damper on things, but AMD has reportedly responded to an enquiry from WCCF Tech by saying that although GPU-Z reports a higher memory frequency, the change has not actually taken place on the hardware itself.

The other AMD card we tested, the ASUS Strix Radeon R9 390X, wasn't a very capable overclocker either. We were only able to achieve a measly 50MHz overclock on the core speed, and the card even displayed signs of throttling in Fire Strike Ultra. We suspect this is due to the card hitting its power limits.

On the other hand, the two more power efficient NVIDIA cards proved to be highly overclockable. The GeForce GTX 980 Ti posted over a 10% gain in Fire Strike Ultra, a fairly respectable increase over its stock speeds.

A good contender that arrived a little too late

AMD needs a win with the Radeon R9 Fury X. No company wants to always be seen as the budget and value-for-money option, and the Fury X is supposed to be AMD’s attempt at reasserting itself in the high-end graphics card market.

If AMD had released the Fury X a little earlier, perhaps alongside or slightly after the NVIDIA GeForce GTX Titan X, we'd have unreservedly said that this was the enthusiast card to get. It manages to deliver about 80% of the Titan X's performance at a far more palatable price. And with its excellent liquid cooling solution, tantalizing design and build quality, and compact size, enthusiasts would have been clamoring for it.

But then we have the elephant in the room – the NVIDIA GeForce GTX 980 Ti. At US$649, it goes head-to-head with the Radeon R9 Fury X, yet manages to deliver performance that comes within inches of the GeForce GTX Titan X. Of course, NVIDIA has put the same GM200 GPU on the GeForce GTX 980 Ti, disabled a couple of SMM units, and slapped an attractive price on it. The result is a card that dishes out most of what the Titan X delivers, is consequently faster than the Fury X, and costs the same as the latter.

As a result, the GeForce GTX 980 Ti is really what the Radeon R9 Fury X could have, or should have, been. The timing of NVIDIA's release was no coincidence: the company probably planned it to pre-empt the impending release of the Radeon R9 Fury X and tighten its hold on the upper echelons of the graphics card market. From the looks of it, that strategy has succeeded. It's difficult to recommend the Radeon R9 Fury X over the GeForce GTX 980 Ti when the latter offers better performance at the same price, in the US at least.

Locally, the PowerColor Radeon R9 Fury X is a bit more attractive because it retails for S$1,009, which undercuts equivalent GeForce GTX 980 Ti reference cards. For instance, the Gigabyte GeForce GTX 980 Ti is going for S$1,080. Custom GeForce GTX 980 Ti cards from the likes of ASUS, MSI, and Gigabyte will cost even more (up to S$100 more), but they will also extend their lead over the Radeon R9 Fury X with their factory overclocks, so it's up to users to decide whether the premium is worth it.
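To put "a bit cheaper" into numbers, here is the arithmetic on the two local prices quoted above. Retail prices change, so treat this as a snapshot rather than a standing comparison.

```python
fury_x_sgd = 1009       # PowerColor Radeon R9 Fury X, local retail price
gtx_980_ti_sgd = 1080   # Gigabyte GeForce GTX 980 Ti, local retail price

saving = gtx_980_ti_sgd - fury_x_sgd   # absolute saving in S$
saving_pct = saving / gtx_980_ti_sgd   # saving relative to the GTX 980 Ti's price
print(f"The Fury X saves S${saving} ({saving_pct:.1%}) against the GTX 980 Ti")
```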

The way it looks, local customers will indeed be able to get the performance they pay for. But price aside, the Fury X is still worth your consideration, thanks to its design and excellent cooling. Oh, and did we mention we love the option to customize your own front plate? The Fury X is a far stronger contender at higher resolutions and more demanding settings, so if you game at 2560 x 1440 and up and like to turn up the eye candy, the card is definitely worth a look. Furthermore, the reference NVIDIA GeForce GTX 980 Ti and assorted custom cards simply can't hold a candle to the Fury X's chilly temperatures and winning design. So if cooling and aesthetics are important considerations for you, the Fury X is the more enticing option.

It also has one other key feature going for it: its size. At just 194mm in length, the Fury X is a lot shorter than competing NVIDIA cards. Many custom versions of the GeForce GTX 980 Ti from add-in card manufacturers are pushing 300mm in length, so the Fury X will fit in somewhat more compact cases. However, you'll still have to make space for the 120 x 120mm radiator, which is fairly thick at around 45mm. So if you also have a liquid-cooled CPU, you may want to check that you have space and clearance for an additional radiator.

The bottom line? If you game at high resolutions and want a good-looking card that doesn’t require you to rob a bank to get it, you should definitely consider the Fury X. But if you’re looking for as much performance as possible at around the same price and don’t care much for liquid cooling, there’s really no beating the NVIDIA GeForce GTX 980 Ti.