Engadget has been testing and reviewing consumer tech since 2004. Our stories may include affiliate links; if you buy something through a link, we may earn a commission.

Are Facebook and Twitter ready for a contested election?

The companies have spent months planning for the worst.

For Facebook and Twitter, the 2020 presidential election has required a different kind of preparation than in previous years. Yes, the companies are still watching out for foreign influence campaigns and other security threats, but there is also an entirely new set of circumstances to contend with.

The coronavirus pandemic means a record number of people are expected to vote by mail, which could delay results well past election night. There’s also a far-reaching misinformation pandemic. Dangerous conspiracy theories, like QAnon, have more followers and are more mainstream than ever before. And there’s a sitting president who has repeatedly used his social media accounts to spread baseless claims about election fraud, and has suggested he may not accept the outcome of the election.

It’s a combination that could be particularly disastrous for social media platforms, which are eager to avoid repeating the mistakes of the last presidential election. And the stakes this year are even higher, as viral conspiracy theories and misinformation could easily fan the flames of any number of election-day nightmare scenarios.

“I'm particularly worried about misinformation either around election day that gives false information about say the candidates’ health, or some other important issue relevant to the election that might be difficult for the [social media] platforms to respond to in real time,” says Rick Hasen, a professor at UC Irvine who specializes in election law. “I'm also worried about a post-election period, if there is a close election, and you have a candidate declaring victory. There could be a lot of misinformation about ballots that have not been counted yet, and about claims of fraud or some kind of wrongdoing.”

Election experts aren’t the only ones considering these scenarios. Facebook and Twitter are also gaming out the many things that could go wrong. Both companies have implemented a number of changes to combat viral misinformation and prepare for an uncertain election aftermath.

Planning for the worst

Perhaps the most striking thing about the flurry of policy changes Facebook and Twitter have laid out over the last several weeks is that both companies seem to be planning for some pretty bleak outcomes.

Twitter, for example, made several election-specific changes to its civic integrity policy. And while the new rules address how the service plans to deal with unverified claims of “election rigging” or a candidate who declares victory before results have been finalized, the policy accounts for something even darker: misinformation that could incite “unlawful conduct to prevent a peaceful transfer of power or orderly succession.”

“Twitter plays a critical role around the globe by empowering public conversation, driving civic participation, facilitating meaningful political debate, and enabling people to hold those in power accountable,” Jessica Herrera-Flanigan, Twitter’s VP of Public Policy for the Americas, said in a statement. “That’s why, in the lead up to Election Day 2020, we’ve made a number of significant policy, product and enforcement updates to ensure that we’re prepared to protect the integrity of the US election conversation on Twitter.”

Meanwhile, Facebook has spent the last several months gaming out dozens of potential scenarios and “contingency plans,” The New York Times reported this summer. Among the outcomes the company has considered: how Facebook should react if Trump uses the social network to delegitimize the result or to claim that some kind of fraud has taken place. Nick Clegg, the company’s head of global affairs, has also said Facebook has plans in place in case the election aftermath is “extremely chaotic” or even violent.

The details of these scenarios, as well as Facebook’s specific plans to deal with them, are still unclear. But the social network has announced some significant changes in recent weeks that offer some insight into what its priorities may be.

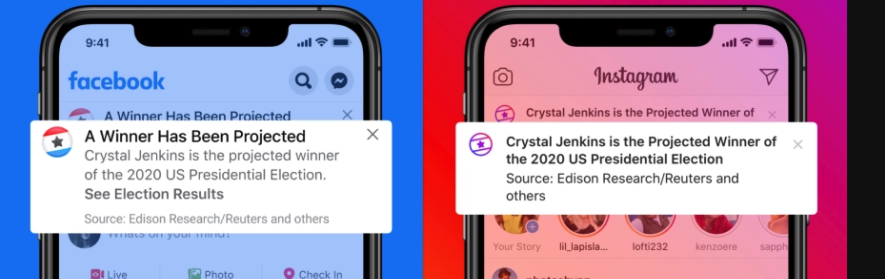

For example, the company has said it will halt political ads for an unspecified period of time after the election. (Facebook will also bar new political ads from its platform during the week before election day.) It’s also taken some pages out of its COVID-19 playbook with a “voting information center” and in-app messages explaining the ballot-counting process. Additionally, the social network previewed separate PSAs that will appear in the event one candidate “declares premature victory” or disputes the election result.

These are real concerns for Facebook. Mark Zuckerberg told employees that some of the company’s recent policy changes, like a crackdown on QAnon and militia groups, are the result of “an increased risk of violence and unrest, especially around the elections,” BuzzFeed News reported.

While some of this may sound alarmist, these are more than just hypothetical scenarios. Facebook and Twitter have been inundated with conspiracy theories and misinformation about the election. Disinformation about voting, particularly around voting by mail, has been rampant. Complicating things for social media platforms: the president is the source of many of these claims. That’s forced Facebook and Twitter into the uncomfortable position of labeling or fact-checking the president’s claims.

It’s not just a Trump problem, though. Viral stories of “mail dumping” have been distorted on social media by the right and the left to create misleading narratives about the election, according to the Election Integrity Partnership, a joint project between researchers at Stanford, the University of Washington, Graphika and the Atlantic Council. These stories, which are often shared out of context, could “preemptively undermine faith in election results,” the researchers warn.

They’ve spotted other worrying trends, too. One is a QAnon-linked narrative that we’re in the midst of a “color revolution,” a term conspiracy theorists are using to preemptively explain a potential Trump loss. According to this narrative, if the president loses his bid for re-election, it will be the result of more “deep state” meddling, a framing that gives conspiracy theorists a new story to latch onto.

“For the first time, powerful American politicians and partisan media influencers are attempting to preemptively delegitimize the validity of the election itself,” researchers at the Election Integrity Partnership explained.

The shadow of 2016

These kinds of concerns also underscore just how much has changed since the 2016 presidential election, when social media companies were completely unprepared for Russia’s influence campaigns. Since then, the social media industry has come together to fight these kinds of foreign influence operations, and has seen a lot of success.

“Where we've seen the most progress between 2016 and 2020, is likely on the foreign interference side,” says Camille Francois, Chief Innovation Officer at Graphika, a firm that tracks disinformation on social media. “In 2016, the idea that there were sophisticated influence operations on social media by foreign actors to target elections wasn't something that was a core preoccupation of the platforms.”

Four years after Zuckerberg first called it a “crazy idea” that Facebook could have impacted the election, the company is much more attuned to threats than it once was. This year, Facebook has uncovered a couple of operations linked to Russia’s Internet Research Agency, the infamous troll farm behind much of the 2016 interference, but they haven’t been nearly as successful or widespread as they once were.

That’s not to say that foreign interference isn’t an issue this year. In recent weeks, intelligence officials have warned that both Russia and Iran are trying to influence the election. And in Russia’s case, state hackers have “targeted dozens of state and local governments and aviation networks,” according to The New York Times. But experts have also cautioned that the more pressing concern may not be how a foreign adversary could actually meddle in the election, but the perception that it has done so, a tactic known as “perception hacking.”

The FBI and Cybersecurity and Infrastructure Security Agency (CISA) warned of this last month, noting that claims of hacked voter information are meant to “undermine confidence in U.S. democratic institutions” by manipulating public opinion. Facebook officials have issued similar warnings.

“On the one hand, it’s important to report misleading information that could be dangerous. On the other hand, by doing this you give it oxygen,” Francois said. “If you miss the mark, you end up really feeding either conspiratorial stories or giving an acceleration boost to misinformation or even influence operations.”

Avoid becoming part of the problem

The sheer amount of misinformation and disinformation that platforms are dealing with also helps explain why the companies have turned some of their efforts to reducing virality.

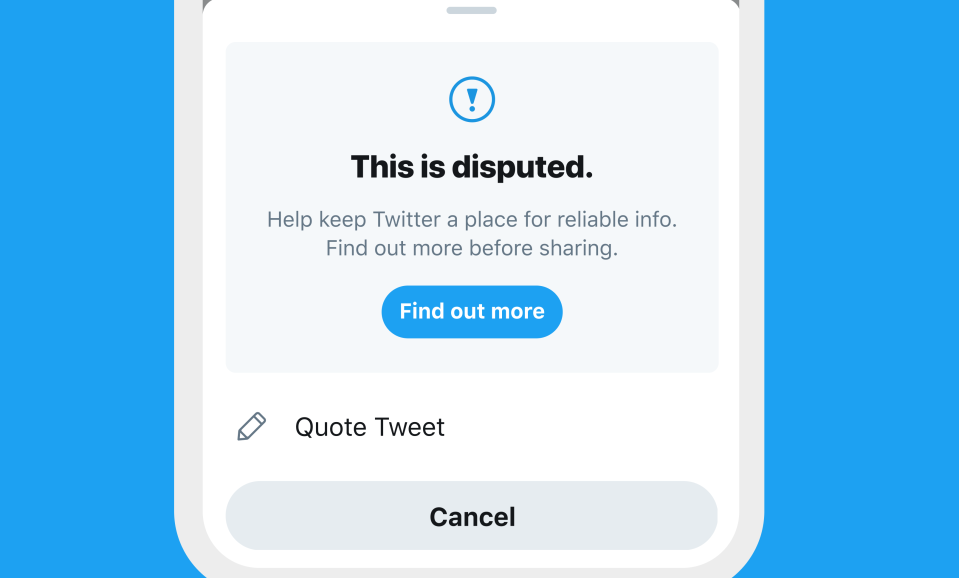

Twitter has introduced a series of PSAs about voting and the election that are designed to preemptively debunk misinformation before it goes viral. The company is also encouraging users to Quote Tweet instead of Retweet, to discourage blind sharing that adds nothing new. It may seem like a small change, or even an annoyance, but the company believes that adding even a small amount of friction to the process can encourage more thoughtful sharing and less of the knee-jerk rage shares that often fuel viral narratives. Facebook has employed a similar strategy by down-ranking posts that are debunked by its fact checkers in order to make them less visible to users.

Likewise, Facebook has reportedly considered using the same kind of emergency tools previously used in countries like Myanmar and Sri Lanka. These last-ditch measures could allow the company to prevent posts from going viral or change what appears in users’ News Feeds, according to The Wall Street Journal.

These tactics don’t always have the intended effect, though. When the New York Post published a story about emails purportedly found on a laptop belonging to Joe Biden’s son, Facebook and Twitter took steps to pump the brakes on its spread. Facebook opted to down-rank the story to slow its reach, while Twitter blocked it entirely before eventually reversing its decision, though not before its actions enraged almost the entire Republican establishment. Some argued that the blocking may have done more harm than good, as it only encouraged more people to read and share the story.

That’s why social media users also shouldn’t rely on Facebook and Twitter to get it right every time (and history has shown they often don’t). Election experts caution that it’s equally important for people to be extra cautious about what they post and share.

“People should think before they share a viral post, especially if it conforms with their own worldview,” says Hasen, the UC Irvine professor. “Sometimes when news appears too good to be true, it actually is. It turns out to be false information.”