NVIDIA G-Sync 101: Everything you need to know up until now

This article was first published on 23rd April 2016 and republished due to its relevancy.

Solving the perennial problem of stutter and lag

Gaming monitors used to be characterized by two simple features – fast pixel response times and high refresh rates. That changed in 2013 when NVIDIA first introduced us to G-Sync, a variable refresh rate technology that it said was the ultimate solution to the lag, stutter and screen tearing that have continually dogged gamers.

By synchronizing the monitor’s refresh rate with the frame render rate of a compatible NVIDIA card, G-Sync practically guaranteed a smooth and tear-free gaming experience for the first time. Indeed, in our first impressions of the technology over two years ago, we immediately noticed how G-Sync kept the gameplay butter-smooth, whereas a lesser monitor without G-Sync faltered and exhibited noticeable stutters under identical conditions. Once you experience the difference, you will probably have a hard time accepting regular displays.

G-Sync has come a long way since then, at least in terms of adoption. While initially confined to a limited number of high-end monitors, G-Sync has since made its way onto notebook displays and a growing number of gaming monitors (even curved ones!). Now, if you want to head out and buy a gaming monitor, you’ll have to decide if you want one that supports G-Sync as well.

Unfortunately, G-Sync monitors don’t come cheap, especially when compared to monitors that support FreeSync, AMD’s rival variable refresh rate technology. Manufacturers have to integrate a dedicated G-Sync module, which adds to their costs, so G-Sync monitors often carry a significant price premium. For instance, the 28-inch Acer XB280HK display costs S$1,099, while the 27-inch ASUS ROG Swift PG279Q is even more expensive at S$1,369. And if you want a curved display, Acer's Predator X34 is S$1,499.

But even if you’re willing to swallow the upfront costs of a G-Sync capable monitor, you’ll still have to make sure that you have a compatible graphics card. It goes without saying that AMD cards are a no-go, and you’ll require at least an NVIDIA GeForce GTX 650 Ti Boost or newer, and have driver version 340.52 or higher.

With that said, G-Sync may still not be right for everyone, and there are even certain scenarios where you may not want to have G-Sync enabled. Read on to find out how G-Sync works, and how you can get the most out of it.

Before G-Sync, there was V-Sync

In the absence of G-Sync, gamers might experience either one of two issues – stuttering or tearing. The first generally occurs when frame rates are low, and is commonly referred to as lag, while the second tends to present itself when frame rates are too high and exceed the maximum refresh rate of the monitor.

The link between stuttering and low frame rates is clear. The graphics card needs to render a certain number of frames per second in order to deliver a playable experience. This figure is generally in the range of 20 to 30fps for single-player games, although players of fast-paced, reaction-based shooters will require a far higher figure (usually over 100fps at the very least).

But when it comes to screen tearing, that’s when a powerful graphics card can become more of an annoyance than a blessing. For instance, it would be a problem if your card was pushing 120fps on a 60Hz monitor, because the monitor can only handle 60 frames a second. The monitor essentially cannot keep up with your graphics card, and when a new frame is rendered in the middle of a scan interval, sections of two different frames are displayed on your screen, creating a disjointed image with horizontal tears.
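To make the 120fps-on-60Hz case concrete, here is a minimal Python sketch of where tears would land on screen. It rests on simplifying assumptions of our own: frames arrive at perfectly even intervals, the first frame lines up with the start of a scan, and the vertical blanking interval is ignored. The function name `tear_lines` is purely illustrative.

```python
from fractions import Fraction


def tear_lines(gpu_fps: int, refresh_hz: int, scans: int = 1) -> list:
    """Return where buffer swaps land during the first `scans` refresh cycles.

    Each position is a fraction of screen height (0 = top of the screen).
    Assumes V-Sync is off and frames arrive at perfectly regular intervals.
    """
    frame_time = Fraction(1, gpu_fps)   # seconds per rendered frame
    scan_time = Fraction(1, refresh_hz)  # seconds per monitor refresh
    tears = []
    k = 1
    while k * frame_time < scans * scan_time:
        # How far through the current scan the monitor is when frame k arrives.
        position = (k * frame_time) % scan_time / scan_time
        if position != 0:  # a swap exactly at the start of a scan does not tear
            tears.append(position)
        k += 1
    return tears
```

Under these assumptions, `tear_lines(120, 60)` gives a single tear halfway down the screen on every refresh, while `tear_lines(60, 60)` gives none.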

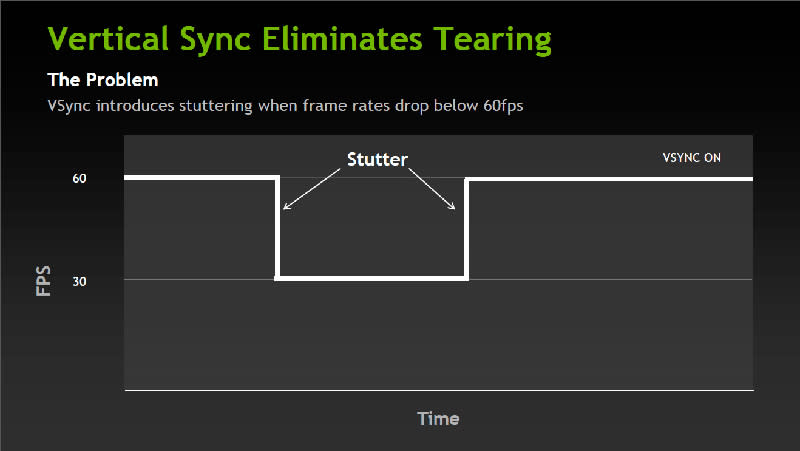

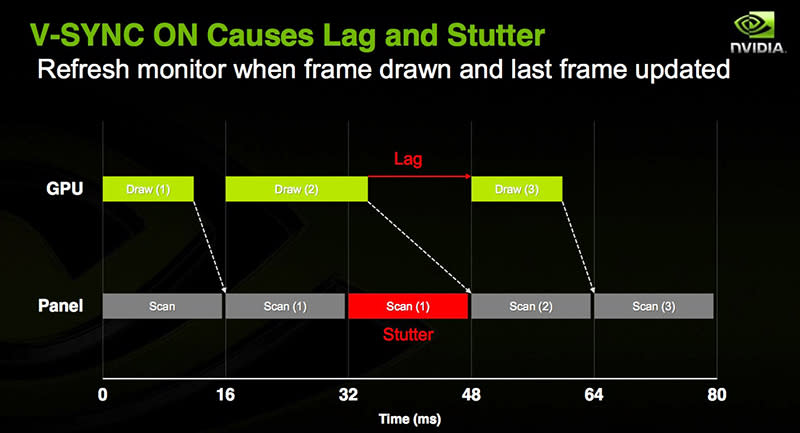

The solution to that used to be V-Sync (or vertical synchronization), a fairly crude method. V-Sync eliminates screen tearing by forcing the graphics card to wait until the monitor is ready to display the next frame, effectively limiting the frame rate output of your card to the maximum refresh rate of your monitor. That’s all well and good if you have a card powerful enough never to drop below the maximum refresh rate, because if that happens even once, stuttering occurs.

V-Sync responds to frame rate dips by dropping the maximum cap even further, to 30 or 45fps for example. If frame rates increase, the cap is automatically raised to 60fps again. These changes in the frame rate cap can occur quite frequently in graphically-intensive games, resulting in noticeable juddering that can put quite a dampener on the entire experience. Consistent frame rates play a big part in determining whether or not you get fluid gameplay, which is why a rapidly alternating frame rate cap is such a problem, even if 30fps may appear more than enough for some folks.

Furthermore, when the graphics card cannot keep up with the monitor’s fixed refresh rate, the panel ends up displaying the same frame over more than one scan interval while waiting for the next one to be rendered, which contributes to the choppy experience as well.
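The arithmetic behind these sudden drops can be sketched in a few lines of Python. This is a simplified double-buffered model of our own, not a description of any particular driver: each frame stays on screen for a whole number of refresh intervals. In this model an in-between figure such as 45fps can only appear as an average of alternating 60fps and 30fps stretches. The function name `vsync_effective_fps` is illustrative.

```python
import math


def vsync_effective_fps(gpu_fps: float, refresh_hz: float) -> float:
    """Frame rate actually shown on a fixed-refresh panel with V-Sync on.

    Simplified model: a frame that misses a refresh waits for the next one,
    so every frame occupies a whole number of refresh intervals.
    """
    # Refresh intervals each frame occupies (at least one).
    intervals = max(1, math.ceil(refresh_hz / gpu_fps))
    return refresh_hz / intervals
```

With a 60Hz panel, a card managing 59fps ends up showing only 30fps in this model, because every frame just misses a refresh and is held for two intervals.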

And even if none of these issues occurred, V-Sync can introduce other unwanted issues like markedly increased input lag, which decreases the overall feeling of responsiveness. One of our most unpleasant experiences was in Portal 2, where turning V-Sync on created so much delay in our mouse movements that we were willing to turn it off and put up with the huge amounts of screen tearing that occurred while Wheatley was trying to bring the whole facility down.

How does G-Sync solve all this?

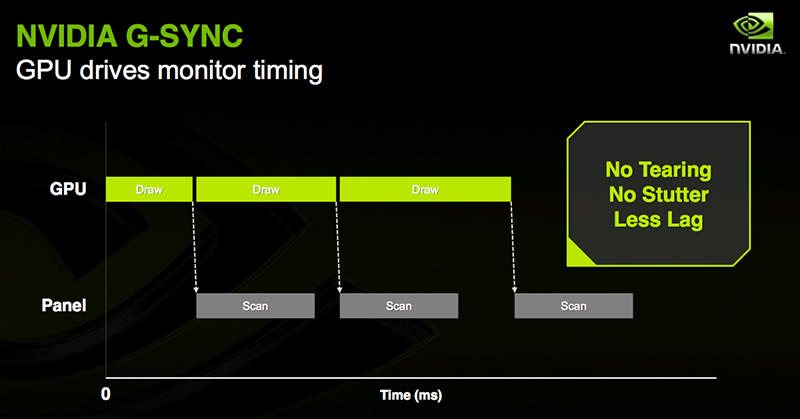

With G-Sync, there doesn’t have to be a trade-off between screen tearing and input lag. Because the dedicated G-Sync module replaces the monitor’s scaler, NVIDIA has control at both ends of the graphics pipeline, from when a frame is rendered by the GPU until it is displayed on your screen.

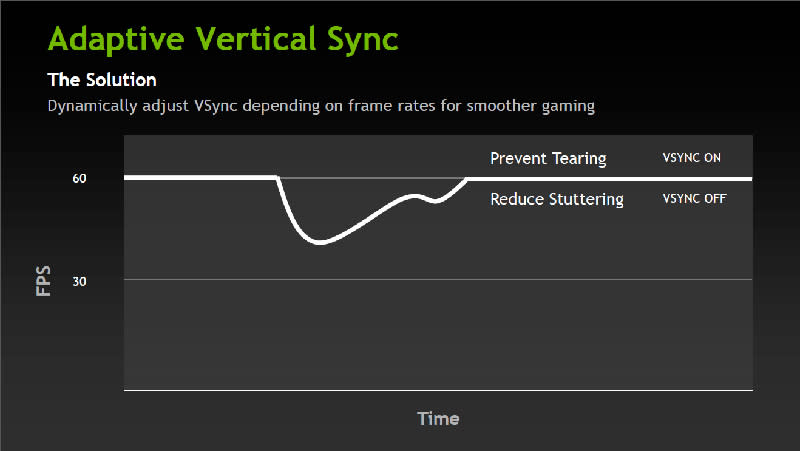

Do note that this is nothing like Adaptive V-Sync, which was arguably a stopgap measure on NVIDIA’s part to fix the issues with V-Sync. All Adaptive V-Sync does is turn V-Sync off when the frame rates drop below the V-Sync cap in order to minimize stuttering; when frame rates exceed the cap, it turns it back on.
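The Adaptive V-Sync rule described above is simple enough to write down directly. The sketch below is our own illustration; the function name, the returned fields, and the choice to treat a frame rate exactly equal to the refresh rate as "V-Sync on" are assumptions.

```python
def adaptive_vsync(gpu_fps: float, refresh_hz: float) -> dict:
    """Illustrative model of the Adaptive V-Sync on/off rule."""
    # V-Sync is on at or above the cap, off below it (equality is our assumption).
    vsync_on = gpu_fps >= refresh_hz
    return {
        "vsync": vsync_on,
        # With V-Sync on, output is capped at the refresh rate.
        "displayed_fps": refresh_hz if vsync_on else gpu_fps,
        # With V-Sync off, frames can arrive mid-scan, so tearing can return.
        "tearing_possible": not vsync_on,
    }
```

Note that the panel’s refresh rate never changes here, which is the key difference from G-Sync.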

Instead of having to deal with a fixed refresh rate, G-Sync varies the panel’s refresh rate in accordance with the frame rate output, so the display is able to accept the frame as soon as the GPU has rendered it. This means that neither the display nor the GPU has to wait for the other, which translates into smoother gameplay, lower input lag, and improved responsiveness. The variable refresh rate also means that the panel will not have to redraw the same frame just because the graphics card cannot keep up, thus minimizing potential stuttering issues.
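A rough way to see the difference is to compare when a finished frame reaches the screen under each scheme. The Python sketch below is a simplified model of our own: frame finish times are given in milliseconds, the fixed-refresh panel shows a frame at the next refresh boundary, and the variable-refresh panel shows it immediately unless that would exceed its maximum refresh rate. Both function names are illustrative, and the panel’s minimum refresh rate is ignored.

```python
import math


def display_times_vsync(finish_times_ms: list, refresh_hz: float) -> list:
    """Fixed refresh with V-Sync: each frame waits for the next refresh tick."""
    interval = 1000 / refresh_hz
    return [math.ceil(t / interval) * interval for t in finish_times_ms]


def display_times_vrr(finish_times_ms: list, max_hz: float) -> list:
    """Variable refresh: show each frame as soon as it is ready,
    but never sooner than one minimum interval after the previous refresh."""
    min_gap = 1000 / max_hz
    shown = []
    last = -math.inf
    for t in finish_times_ms:
        last = max(t, last + min_gap)
        shown.append(last)
    return shown
```

For frames finishing at 25ms, 45ms and 70ms, a fixed 50Hz panel with V-Sync would show them at 40ms, 60ms and 80ms in this model, whereas a variable-refresh panel with a 100Hz ceiling would show them the moment they are ready.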

At the same time, tearing is prevented as the GPU polls the display to find out if it is in the middle of a vertical blanking interval (or the time between frames in layman's terms) so it will not output a frame in the middle of a scan interval.

On top of that, G-Sync also has a way of handling situations in which the frame rates fall below the minimum refresh rate of the monitor. NVIDIA has been sparse on the details of exactly how this works, but that hasn’t stopped various news sites from speculating on the details.

The prevailing theory is that NVIDIA uses some sort of frame repeating in order to continue to take advantage of G-Sync, essentially repeating frames alongside the variable refresh rate instead of reverting to the minimum refresh rate. Let’s say we have a monitor with a refresh rate between 30Hz and 144Hz. If frame rates fall to 29fps for instance, the display will end up refreshing at 58Hz, with each frame drawn twice and timed to coincide with the monitor refresh interval to maintain a stutter- and tear-free experience.

And should your card prove capable of pushing out frame rates above the maximum refresh rate, the monitor will simply operate as if V-Sync were enabled, with the frame rate capped at whatever the maximum refresh rate is.
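Since NVIDIA has not published the details, the following Python sketch only illustrates the speculated frame-repeating behaviour and the cap at the top of the range; it is not NVIDIA’s actual algorithm. The function name `gsync_refresh` is ours, and we assume the panel’s maximum refresh rate is at least twice its minimum so that a suitable multiple always fits.

```python
def gsync_refresh(fps: float, min_hz: float, max_hz: float) -> tuple:
    """Return (panel refresh rate, times each frame is drawn).

    Illustrates the speculated behaviour only; assumes max_hz >= 2 * min_hz.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if max_hz < 2 * min_hz:
        raise ValueError("this sketch assumes max_hz >= 2 * min_hz")
    if fps >= max_hz:
        # Above the range the monitor behaves as if V-Sync were enabled.
        return max_hz, 1
    repeats = 1
    # Below the range, draw each frame more than once until the
    # resulting refresh rate is back inside the supported window.
    while fps * repeats < min_hz:
        repeats += 1
    return fps * repeats, repeats
```

On the 30Hz to 144Hz monitor from the example, `gsync_refresh(29, 30, 144)` gives a 58Hz refresh with each frame drawn twice, while `gsync_refresh(200, 30, 144)` is simply capped at 144Hz.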

What do I need?

Short of running out to get a G-Sync capable notebook, you’ll need to make sure you have the right card, monitor, drivers, and even cable to get G-Sync working properly.

The monitor part is an absolute no-brainer. G-Sync capable monitors are clearly labeled and come with a dedicated G-Sync module in place of the regular scaler. A variety of brands manufacture G-Sync monitors to date, including the likes of Acer, AOC, ASUS and BenQ.

And unlike AMD’s recently-introduced Low Framerate Compensation (LFC) technology, which requires a monitor with a maximum refresh rate at least 2.5 times that of the minimum, any G-Sync monitor will allow you to enjoy the full benefit of the technology. Essentially, you’ll only have to worry about picking a G-Sync monitor that fits your budget and preferences (resolution, size, design etc.).
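The 2.5x requirement on the FreeSync side is easy to check for any given panel. The snippet below is a small illustration of that rule as stated above; the function name and the example refresh ranges are hypothetical and do not refer to specific monitors.

```python
def supports_lfc(min_hz: float, max_hz: float, ratio: float = 2.5) -> bool:
    """True if the panel's range meets the stated requirement:
    maximum refresh rate at least `ratio` times the minimum."""
    return max_hz >= ratio * min_hz
```

A hypothetical 30Hz to 144Hz panel passes this check, while a 40Hz to 60Hz panel does not.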

Next, you’ll need a compatible graphics card and updated drivers. As mentioned earlier, NVIDIA requires a GeForce GTX 650 Ti Boost (not the same as the GeForce GTX 650 Ti) at the minimum and driver version 340.52 or higher, and AMD cards will not work at all. For more details on AMD’s own variable refresh rate technology, you can check out our separate coverage of FreeSync.

Finally, you need a DisplayPort 1.2 cable. The first wave of G-Sync monitors offered DisplayPort inputs only, while newer G-Sync monitors like the ASUS ROG Swift PG279Q feature an updated module that also allows input over HDMI 1.4. Even so, G-Sync itself still works over DisplayPort only, unlike AMD’s FreeSync, which recently became available over HDMI.

When will I not want to have G-Sync enabled?

But for all the praises that have been sung about the technology, there are still certain scenarios where more discerning users may want to turn G-Sync off. This mostly applies to twitch reaction games like Counter-Strike: Global Offensive, where latency must be kept to an absolute minimum and which play best at extremely high frame rates (200 to 300fps).

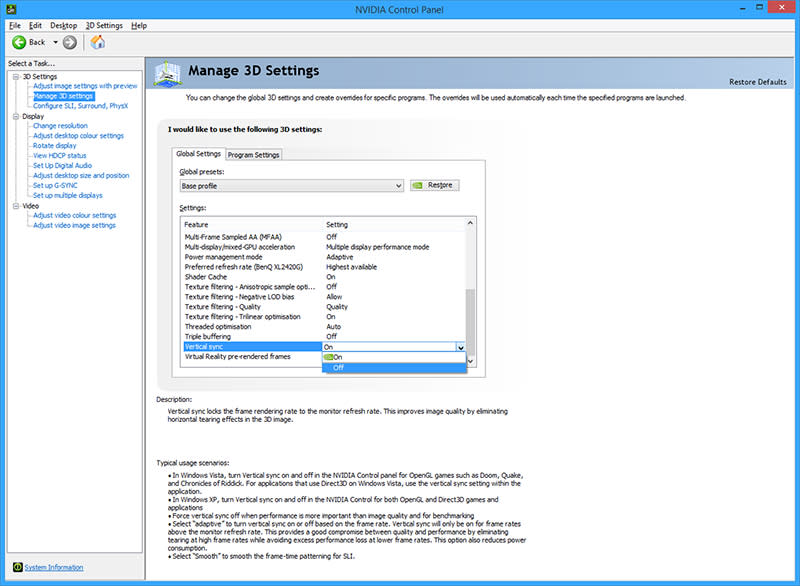

In a driver update in May 2015, NVIDIA added the ability for users to turn G-Sync off when frame rates are above the maximum refresh rate cap. To do so, simply open the NVIDIA Control Panel and head to the ‘Manage 3D Settings’ sub-menu. Look for the V-Sync dropdown menu and set it to ‘off’. NVIDIA says that this might reintroduce tearing, but you can expect slightly reduced input lag.

The control panel lets you fix the settings for all applications, but a separate ‘Program Settings’ tab also gives you the option to create custom graphics settings for individual games, so you can opt to disable G-Sync for fast-paced reaction shooters and enable it for more demanding single-player titles automatically without having to fiddle with the settings every time.

With that said, G-Sync makes things a lot simpler compared to V-Sync. The potential problems introduced by the latter meant that you had to consider multiple factors and individual use cases when deciding whether or not to enable it. For instance, if you were consistently exceeding your monitor’s refresh rate, you might decide to leave it off, provided tearing was not a huge issue. Then again V-Sync plays differently with different games, so the perceived input lag might be bearable on one title but almost unplayable on the next, which would create another conundrum (tearing or higher latency?) for the user.

Fortunately, G-Sync’s markedly improved implementation means that users no longer have to choose. For the vast majority of users, leaving G-Sync on will work just fine, regardless of the genre of games or the average frame rates they are getting.

Here’s what we think in a nutshell. If your card is consistently exceeding the maximum refresh rate of your monitor, and if not much tearing is present (games which require you to swipe left and right very often and quickly are more prone to exhibit tearing), you can leave G-Sync off and enjoy a maximally responsive experience. However, tearing is less evident on higher refresh rate panels – 120Hz or 144Hz for instance – so you might still want to leave G-Sync on if you have a 60Hz G-Sync panel.

But if you have a less powerful card that pushes frame rates in between your monitor’s refresh rate range, you’ll benefit the most from G-Sync’s ability to vary your screen’s refresh rate. In scenarios like this, regardless of whether you have a 60Hz or 144Hz panel, leave both G-Sync and V-Sync enabled.
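Our rule of thumb can be summarised as a small decision function. This is only a sketch of the advice in this section, not an official NVIDIA recommendation; the function name, parameters and return strings are our own.

```python
def recommend(avg_fps: float, max_hz: float, tearing_noticeable: bool) -> str:
    """Encode the rule of thumb from this section as a simple decision."""
    if avg_fps > max_hz:
        # Card consistently outruns the panel: responsiveness comes first,
        # unless tearing is visible enough to be a nuisance.
        if tearing_noticeable:
            return "G-Sync on"
        return "G-Sync off"
    # Frame rates fall inside (or below) the panel's refresh range.
    return "G-Sync and V-Sync on"
```

For example, a card averaging 250fps on a 144Hz panel with little visible tearing lands on "G-Sync off", while one averaging 70fps on the same panel lands on "G-Sync and V-Sync on".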

Finally, you may have come across mentions of a certain "performance hit" with G-Sync. We said earlier that the GPU polls the display to find out if it's in the middle of a scan interval. This polling takes about 1ms, and is what's responsible for imposing the performance overhead. However, the overhead in question is minuscule and not something you'll notice in practice (if it is even measurable in benchmarks at all), so we wouldn't even worry about it. In fact, NVIDIA has said that it aims to eventually eliminate polling entirely.

It may seem strange to discuss whether to enable G-Sync or not, especially when anyone who even has the option to enable G-Sync clearly invested in a compatible monitor. However, every game responds differently to G-Sync, and we felt that it was important to highlight the fact that G-Sync may not always be the best option for every situation.

Should I get on board the G-Sync train?

As with all things, it’s important to calibrate your expectations appropriately. G-Sync can indeed improve your gameplay experience, but how much of that improvement you’ll actually perceive will vary according to your setup.

Suffice it to say, owners of 60Hz displays and less powerful graphics configurations will see the most benefit. Higher refresh rate panels refresh more often per second, so when the GPU cannot keep up, a repeated frame persists on screen for less time. This means that stuttering is already less apparent on those panels, which leaves G-Sync with less to fix.
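The numbers behind this are straightforward: on a fixed-refresh panel, a frame that has to be shown again lingers for one extra refresh interval. The short Python sketch below works that out; the function name is ours.

```python
def repeat_penalty_ms(refresh_hz: float) -> float:
    """Extra time (in milliseconds) a frame stays on screen when it has to
    be shown for one additional refresh interval on a fixed-refresh panel."""
    return 1000 / refresh_hz
```

That works out to roughly 16.67ms at 60Hz, 8.33ms at 120Hz and 6.94ms at 144Hz, which is why a missed frame is far more visible on a 60Hz display.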

Similarly, a weaker card will benefit more from a G-Sync display’s ability to synchronize with its frame rate output and deal with inconsistent frame rates, as opposed to a more powerful one that consistently pushes out more than enough frames.

So if you’re constantly struggling with whether to enable or disable V-Sync on your 60Hz display because of a weaker setup, we’d say that there’s plenty of reason to upgrade. But if you own a 120Hz panel with a reasonably powerful graphics card, a switch to a G-Sync display may not be quite the sea change that you expected.