NVIDIA GeForce GTX 1080 review: A new king is crowned

Note: This article was first published on 18th May 2016.

You know you want this card

Pascal has landed at last. While 2014 saw the release of NVIDIA’s second-generation Maxwell cards in the form of the NVIDIA GeForce GTX 980 and 970, 2015 was devoid of any new architectures to further improve performance and efficiency. Instead, we had the GeForce GTX Titan X and 980 Ti, cards that served up beastly performance but were still based on the same Maxwell architecture as their lower powered counterparts.

But enough of that already. There’s a new claimant to the title of most powerful single-GPU card, and that’s none other than the recently announced NVIDIA GeForce GTX 1080. And as it turns out, this is a card with a lot of firsts to its name. Not only is it – alongside the GeForce GTX 1070 – the first to be based on the 16nm FinFET process, it is also the first and only card to feature GDDR5X memory.

Those of you who wanted to see HBM2 on a consumer Pascal card may be disappointed, but GDDR5X does come with its share of improvements over the older GDDR5 standard, which we’ll go into in further detail later on.

But perhaps the biggest trump card that NVIDIA is holding on to is the claim that the GeForce GTX 1080 is actually faster than the Titan X, while also costing a lot less. The Titan X was the previous king of graphics performance, but it did cost US$999. So at “just” US$699 for the Founders Edition (or US$599 for cards from add-in board partners), the new GeForce GTX 1080 is shaping up to be a seriously tasty morsel for gamers and enthusiasts.

Founders Edition: Just a fancier name for reference cards

With the new Pascal cards, NVIDIA also introduced for the first time something it called Founders Edition cards (where did the apostrophe go?). This had more than a few of us scratching our heads at first. For one, these looked like regular reference cards, but NVIDIA actually gave the impression that these cards would feature speed-binned chips, in other words, chips that had been cherry picked for the best overclocking potential.

On top of that, there was the very confusing price – while reference cards are usually the cheapest of all the available variants of a certain card, these Founders Edition cards were priced at US$699, a full US$100 more than the US$599 price now being attributed to cards from NVIDIA’s partners. And considering that custom cards from manufacturers like ASUS, MSI, and Gigabyte usually feature bonus features like more powerful custom coolers and factory overclocks, the price difference seemed even more strange.

Was NVIDIA really going to sell a less powerful card for more? Furthermore, just what, if anything, was so special about these newfangled reference-looking cards?

As it turns out, we might have been somewhat misled. We now know that these Founders Edition cards are really just reference cards with a new name. All things “reference” have a slightly boring ring to them, and NVIDIA may have been looking to break out from the perception that its own cards were, well, too vanilla.

There’s also another obvious motivation behind NVIDIA’s new Founders Edition cards – revenue. NVIDIA wants to turn these Founders Edition cards into its own line of profit, slapping the US$100 premium on the cards as an additional profit margin. As a result, these Founders Edition cards are also going to be available throughout the production lifetime of the card, unlike previous reference cards that became increasingly difficult to find as time wore on.

There may even be a reason for you to shell out more for a Founders Edition card. As Kyle Bennett of HardOCP noted recently, the cards NVIDIA produced in-house have traditionally been regarded as among the better specimens of a particular GPU. Ultimately, it looks like you’re coughing up additional cash for two things – the NVIDIA brand, and the assurance that the company behind all these GPUs is including the best components on board for a more reliable experience.

But even if that's true, we can't help but think that NVIDIA is only able to do this because of the lack of competition from AMD. There doesn't seem to be an answer to NVIDIA forthcoming from the red camp, especially in the high-end market, which is what allowed the green giant to flex its pricing muscle.

Either way, consumers lose out. However, Founders Edition cards will be available from NVIDIA’s partners as well, so you’re at least not limited to making a direct purchase from NVIDIA itself if you want one of these.

Is it worth it? Well, it’s all that’s going to be available when the GeForce GTX 1080 launches on 27 May, so you may have no choice if you absolutely cannot wait to get your hands on the card.

Meet Pascal

For all the talk about a new architecture, this actually isn’t the first time we’ve seen Pascal. It first showed up in NVIDIA’s Tesla P100, which was announced back in April at GTC 2016. But that was a data center GPU based on the GP100 chip, used for powering huge server arrays and training deep learning networks, whereas the GeForce GTX 1080 is an unabashedly gaming-oriented card featuring the GP104 GPU. 4K gaming? Check. Virtual reality? Check. New features for lower latency and smoother gameplay? Check, check, check.

This is also one of the highest clocked, if not the highest clocked, GPUs we’ve seen in recent years. With a base clock of 1,607MHz and boost clock of 1,733MHz, the GP104 GPU is clearly reaping the benefits of the more efficient 16nm FinFET manufacturing process.

For a clearer idea of what 16nm FinFET does, you only have to look at the card's TDP. This is a 180-watt card that's more powerful than both the GeForce GTX 980 Ti and Titan X, both of which are 250-watt cards.

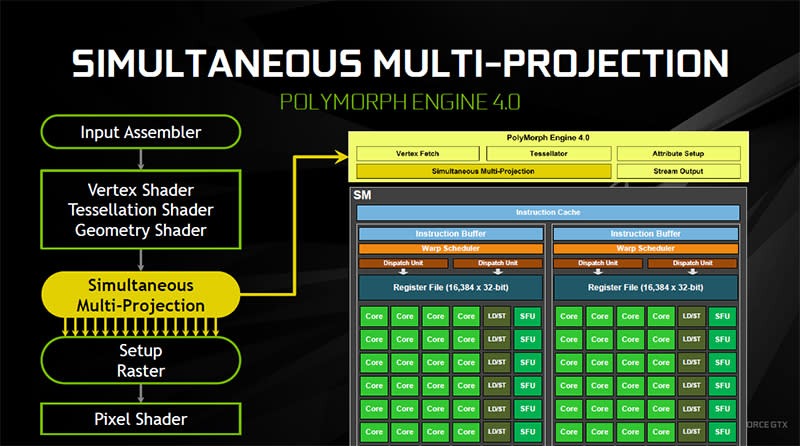

Here’s a look at the block diagram of the GP104 GPU. The GeForce GTX 1080 features four Graphics Processing Clusters (GPCs), each of which comprises five Streaming Multiprocessors (SMs), for a total of 20. Every SM is also paired with a Polymorph Engine that handles things like tessellation and perspective correction, and the two combine to form what is called a Texture Processing Cluster (TPC).

In addition, each SM houses 128 CUDA cores, which brings the total number to 2,560, 25 percent more than the GeForce GTX 980. That’s still shy of the GeForce GTX 980 Ti’s 2,816 CUDA cores, but as we’ll see later, performance isn’t dictated solely by the number of cores.
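The core count falls straight out of the hierarchy described above. Here is a minimal sketch of that arithmetic; the variable names are ours, and the GeForce GTX 980's 2,048 cores are taken from its published specification, which is consistent with the 25 percent figure.

```python
# Shader hierarchy of the GP104 GPU as described in the text.
GPCS = 4             # Graphics Processing Clusters
SMS_PER_GPC = 5      # Streaming Multiprocessors per GPC
CORES_PER_SM = 128   # CUDA cores per SM

total_sms = GPCS * SMS_PER_GPC
total_cores = total_sms * CORES_PER_SM

GTX_980_CORES = 2048     # published spec; matches the "25 percent more" claim
GTX_980_TI_CORES = 2816  # quoted in the text

print(f"SMs: {total_sms}, CUDA cores: {total_cores}")
print(f"Relative to GTX 980:    {total_cores / GTX_980_CORES - 1:+.0%}")
print(f"Relative to GTX 980 Ti: {total_cores / GTX_980_TI_CORES - 1:+.0%}")
```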

And instead of the six 64-bit memory controllers on the GM200 GPU on the GeForce GTX Titan X, the new GP104 GPU has eight 32-bit memory controllers, making for a narrower 256-bit memory bus. Again, this doesn’t mean that the Titan X comes out ahead. Combined with 8GB of GDDR5X memory clocked at 10,000MHz, the GeForce GTX 1080 can effectively lay claim to 320GB/s of bandwidth, just a hair short of the Titan X’s 336.5GB/s.
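For those who want to check the bandwidth figures, peak memory bandwidth is simply the bus width multiplied by the per-pin data rate, divided by eight to convert bits to bytes. The sketch below is ours; the helper name is made up, and the Titan X's 7.01Gbps data rate is an assumption chosen because it reproduces the 336.5GB/s quoted above.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# GeForce GTX 1080: eight 32-bit controllers, 10Gbps GDDR5X.
gtx_1080 = peak_bandwidth_gbs(8 * 32, 10.0)

# GeForce GTX Titan X: six 64-bit controllers; 7.01Gbps GDDR5 is assumed.
titan_x = peak_bandwidth_gbs(6 * 64, 7.01)

print(f"GTX 1080: {gtx_1080:.1f}GB/s")
print(f"Titan X:  {titan_x:.1f}GB/s")
```

The takeaway is that a faster memory type can nearly cancel out a narrower bus.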

We’ve also included here a quick snapshot of the card’s specifications.

Coming from Maxwell, the key difference is that Polymorph Engine 4.0 (Maxwell uses version 3.0) now comes with a new component, called a Simultaneous Multi-Projection (SMP) unit. In a nutshell, this SMP unit is responsible for generating multiple projections of a single geometry stream, and can enable things like improved performance in VR applications, and also a better experience with surround displays (more on that later).

It essentially enables the GPU to process geometry through 16 different pre-configured projections from a single viewpoint in one pass. In terms of the graphics pipeline, a new multi-projection stage enters the pipeline after the geometry pipeline and downstream of the shader stages, so the GPU doesn’t have to repeat all the work for each projection. Furthermore, because each primitive – a basic graphics building block such as a point or line – may appear in multiple projections at the same time, the SMP engine allows the game or application to instruct the GPU to replicate it up to 32 times (16 projections multiplied by two viewpoints for stereo images) without any additional overhead.

All these processes are hardware accelerated, never once leaving the chip, and they are responsible for Pascal’s ability to deliver multiple projections – a feature which has several useful applications as we’ll see soon – with minimal impact on performance.

Enabling more immersive experiences

Now here’s where it gets interesting. It’s all nice and well to talk about multiple projections and geometry pipelines, but what you probably care about is if these will translate into any tangible gains that you can actually perceive. Well, the answer is yes.

For all the advances in display and graphics technologies, previous generation graphics pipelines still worked on the assumption that they would be rendering to a single, flat screen. However, a cursory survey of today’s hardware shows that this assumption no longer fits. Increasingly powerful graphics hardware means that multi-display setups are becoming more feasible, and we are just seeing the first wave of virtual reality headsets from the likes of Oculus and HTC.

But with Pascal, we finally have the tools to maximize these immersive display setups.

Better support for multiple displays

First off, we have something NVIDIA calls Perspective Surround. This directly addresses multi-monitor setups, typically comprised of three monitors, with the two outer panels positioned at an angle to “envelop” the user. The problem before was that the final image output by GPUs still assumed a single, flat projection ‒ where the monitors were located in a straight line ‒ even though they clearly aren’t. The result was warping in the images projected to the peripheral monitors, with visual distortions like lines that don’t match up across the monitors.

One way around this was to have multiple GPUs, where each GPU would handle a display on its own. Unfortunately, this was costly, and probably wasn’t worth the cost and effort in the first place.

Pascal changes this with its SMP Engine, which allows the rendering of multiple projections in a single pass. Perspective Surround can be configured to know that three active projections are required, leveraging on the SMP unit’s ability to render scenes from multiple perspectives in order to output an appropriate image from the right angle. And because it is all rendered in a single pass, you get the best of both worlds with no performance loss and a more immersive multi-monitor experience.

Improved VR performance

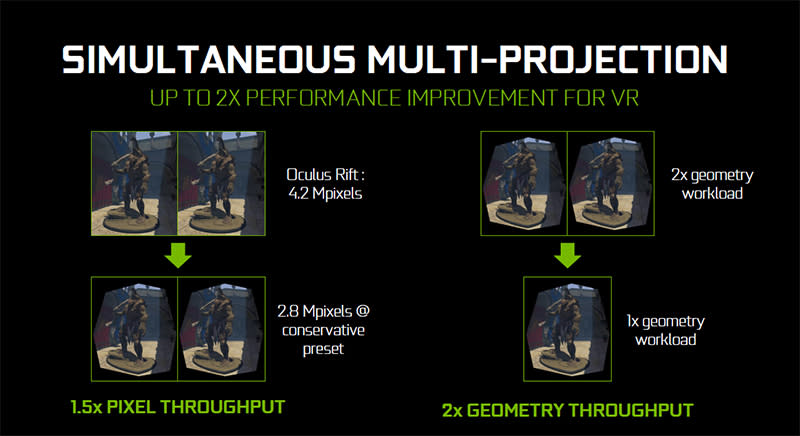

This concept applies to VR as well. Since VR headsets need a separate projection to be generated for each eye in order to produce a stereo image, they ran into hurdles similar to those of multi-monitor configurations. As a result, GPUs needed a separate pass to render the image for each eye, which translated into twice the workload and a whole lot of inefficiencies.

However, Pascal’s SMP engine supports two separate projection centers, where the two positions represent locations of a certain vertex – basically an angular point of some shape or other in a scene – as viewed from the left and right eye. These two stereo projections can then be rendered in just a single pass, a capability known as Single Pass Stereo. This means that all the pipeline work and geometry processing has to take place only once, but is still output twice, saving considerable resources.
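To get a feel for where the savings come from, here is a toy cost model of the two approaches. It is only a sketch: the split into "geometry" and "pixel" work and the millisecond figures are hypothetical numbers of our own, not measurements or NVIDIA data.

```python
from dataclasses import dataclass


@dataclass
class FrameCost:
    geometry_ms: float  # geometry processing per pass (hypothetical)
    pixel_ms: float     # rasterization and shading per eye (hypothetical)


def two_pass_stereo(cost: FrameCost) -> float:
    """Traditional approach: geometry and pixel work are both repeated per eye."""
    return 2 * (cost.geometry_ms + cost.pixel_ms)


def single_pass_stereo(cost: FrameCost) -> float:
    """Single Pass Stereo: geometry is processed once, output is produced twice."""
    return cost.geometry_ms + 2 * cost.pixel_ms


example = FrameCost(geometry_ms=3.0, pixel_ms=4.0)
print(f"Two passes:  {two_pass_stereo(example):.1f}ms")
print(f"Single pass: {single_pass_stereo(example):.1f}ms")
```

In this model the benefit grows with the share of frame time spent on geometry, so scenes heavy on geometry stand to gain the most.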

Then there’s something NVIDIA calls Lens Matched Shading, which relies on the SMP engine’s support for multiple projections as well. There was a precursor to this in second-generation Maxwell, called Viewport Multicast. This divided each image into discrete viewports, which were in turn scaled to a different size based on the maximum sampling resolution needed for that portion of the image. The end result was that while the center of the image remained more or less the same from when it was first rendered to when it was displayed on the lens, the outer viewports were scaled down to avoid wasting resources on rendering peripheral pixels that weren’t needed.

Lens Matched Shading is a more advanced implementation of this. The SMP engine divides the display into four quadrants, which each have their own projection plane. Traditionally, GPUs render a planar projection before warping it to take into account the lens distortion, but this was inefficient because a lot of pixels that were rendered in the first step were ultimately not displayed. Lens Matched Shading corrects these inefficiencies by avoiding the extra work. Instead, it attempts to render an image at the first pass that matches the lens distortion as closely as possible, which keeps it from generating more pixels than needed.

That’s all pretty neat, and according to NVIDIA, the cumulative effect of these two technologies – both Single Pass Stereo and Lens Matched Shading – can double VR performance over a GPU without SMP support.

The first GDDR5X card

The other major change from the Maxwell flagship is the GeForce GTX 1080’s use of GDDR5X memory (the GeForce GTX 1070 still uses GDDR5). As we’ve noted before, HBM2 is still fairly expensive to produce, and GDDR5X provides a way to boost memory bandwidth while still keeping costs in check.

The GeForce GTX 1080 features 8GB of GDDR5X memory, clocked at a 10Gbps transfer rate. That seems pretty high at first, until you consider the fact that Micron’s chips are actually rated for up to 13Gbps and maybe even higher. Nevertheless, this might actually be a deliberate choice, as a low starting point gives all parties more breathing room to scale up in the future.

GDDR5X’s main improvement over GDDR5 is its use of quad-data rate (QDR) bus in place of a double-data rate (DDR) bus. This effectively doubles the rate at which data can be transmitted, all without needing to raise clock speeds.
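Put in numbers, the per-pin data rate is the data clock multiplied by the number of transfers per clock cycle. The sketch below is a simplification of ours; the 2.5GHz clock is an illustrative value picked so that the QDR result lands on the card's 10Gbps, and the function name is hypothetical.

```python
def data_rate_gbps(data_clock_ghz: float, transfers_per_clock: int) -> float:
    """Per-pin data rate: clock frequency times transfers per clock cycle."""
    return data_clock_ghz * transfers_per_clock


DATA_CLOCK_GHZ = 2.5  # illustrative value, not a quoted specification

ddr_rate = data_rate_gbps(DATA_CLOCK_GHZ, 2)  # double data rate
qdr_rate = data_rate_gbps(DATA_CLOCK_GHZ, 4)  # quad data rate

print(f"DDR at {DATA_CLOCK_GHZ}GHz: {ddr_rate:.1f}Gbps")
print(f"QDR at {DATA_CLOCK_GHZ}GHz: {qdr_rate:.1f}Gbps")
```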

In addition to bandwidth improvements, NVIDIA says that it has designed a new circuit architecture to go with the higher transfer rates enabled by GDDR5X. Great attention has also been given to the channel between the GPU and memory die, in order to minimize any signal degradation that might impede performance.

The final feather in the cap is GDDR5X’s lower voltage of 1.35 volts, which combines with the circuit and channel improvements to bring about a far more power efficient operation.

Further adding to GDDR5X’s bandwidth improvements is an enhanced memory compression pipeline. To put things simply, this is brought about by better color compression modes, which reduce the amount of data that has to be fetched from memory per frame. In short, this frees up around 20 percent of additional effective bandwidth for use in other areas that could boost performance.
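As a rough back-of-the-envelope illustration, we can treat that "around 20 percent" as a flat multiplier on the card's 320GB/s of peak bandwidth. That is a simplifying assumption on our part, since real compression gains vary with the content being rendered.

```python
RAW_BANDWIDTH_GBS = 320.0  # peak GDDR5X bandwidth of the GeForce GTX 1080
COMPRESSION_GAIN = 0.20    # "around 20 percent", treated as a flat multiplier (assumption)

effective_bandwidth_gbs = RAW_BANDWIDTH_GBS * (1 + COMPRESSION_GAIN)
print(f"Effective bandwidth: roughly {effective_bandwidth_gbs:.0f}GB/s")
```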

Nevertheless, the fact remains that GDDR5X doesn't solve the problems of GDDR5 memory, like soon-to-be untenable power consumption, which was what prompted AMD to adopt HBM in the first place. HBM2 is the future, and it's likely that flagship cards will go down that route, with GDDR5X being reserved for mid-range models.

Finally, hardware support for asynchronous compute

Asynchronous compute is one of the rare cases where NVIDIA is playing catch-up with AMD, but catch up it has. While Maxwell cards lagged behind their AMD counterparts in DirectX 12 games like Ashes of the Singularity because of the lack of proper hardware support for asynchronous compute, that is happily no longer the case.

We’ve briefly touched on asynchronous compute before in our review of the AMD Radeon R7 370 and R9 380. But to recap, asynchronous compute describes the ability to simultaneously process complex workloads that comprise multiple independent, or “asynchronous”, tasks.

These workloads are separated into graphics and compute tasks, but because they sometimes overlap with each other, and usually utilize different GPU resources, the GPU can boost performance by running these workloads simultaneously instead of serially. This amounts to more efficient resource utilization, and one example could be a PhysX workload (compute) running concurrently with graphics rendering (graphics).

To address this, Pascal has implemented something called dynamic load balancing, which allows either compute or graphics workloads to maximize use of the GPU’s resources if they are freed up. In comparison, Maxwell relied on static partitioning of the GPU into subsets for graphics and compute workloads. This worked well if the balance of work between the two matched the partitioning ratio. But if there was an imbalance and one was taking longer than the other, and both needed to be completed for the pipeline to move forward, the partition handling the quicker task would end up idling, possibly resulting in reduced performance.
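The difference between the two schemes can be illustrated with a toy model. This is a sketch under our own assumptions: work is measured in arbitrary units, the whole GPU gets through one unit per millisecond, and the dynamic case is idealized with no switching overhead. None of the numbers come from NVIDIA.

```python
def static_partition_time(graphics_work: float, compute_work: float,
                          graphics_share: float) -> float:
    """Frame time when the GPU is split into fixed graphics and compute subsets.

    Both tasks must finish before the pipeline moves on, so the slower
    partition sets the pace while the other one idles.
    """
    graphics_time = graphics_work / graphics_share
    compute_time = compute_work / (1 - graphics_share)
    return max(graphics_time, compute_time)


def dynamic_balance_time(graphics_work: float, compute_work: float) -> float:
    """Idealized frame time when freed-up resources go to whichever task remains."""
    return graphics_work + compute_work


graphics, compute = 6.0, 2.0  # hypothetical workload, in work units

print(f"Static 50/50 split: {static_partition_time(graphics, compute, 0.5):.1f}ms")
print(f"Static 75/25 split: {static_partition_time(graphics, compute, 0.75):.1f}ms")
print(f"Dynamic balancing:  {dynamic_balance_time(graphics, compute):.1f}ms")
```

Static partitioning only matches the dynamic result when the split happens to mirror the workload, which is exactly the limitation described above.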

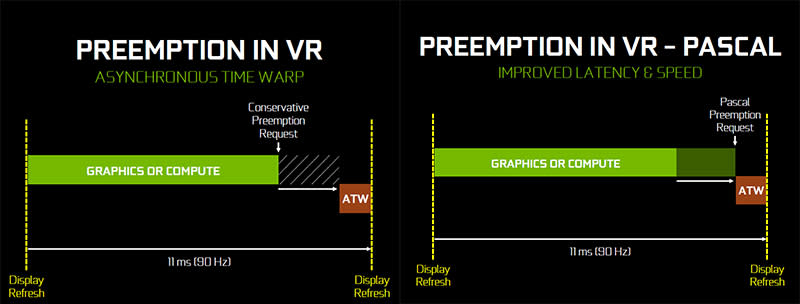

NVIDIA also improved Pascal’s pre-emption capabilities with a feature called Pixel Level Preemption. To cut a long story short, this means Pascal can interrupt a longer task in order to switch to a more time-sensitive workload with very low latency involved, which potentially benefits VR gaming features like asynchronous time warp (ATW). Because ATW works by inserting a repeated frame (corrected for head position) in the event that the GPU cannot keep up with the display's refresh rate, it ideally runs as late as possible to get the most accurate positional information from the headset's sensors. However, before Pascal's pre-emption improvements, ATW requests had to be submitted several milliseconds in advance, leading to greater perceived latency. With Pascal, the finer-grained pre-emption allows ATW requests to be submitted much later.

GPU Boost 3.0 and Fast Sync

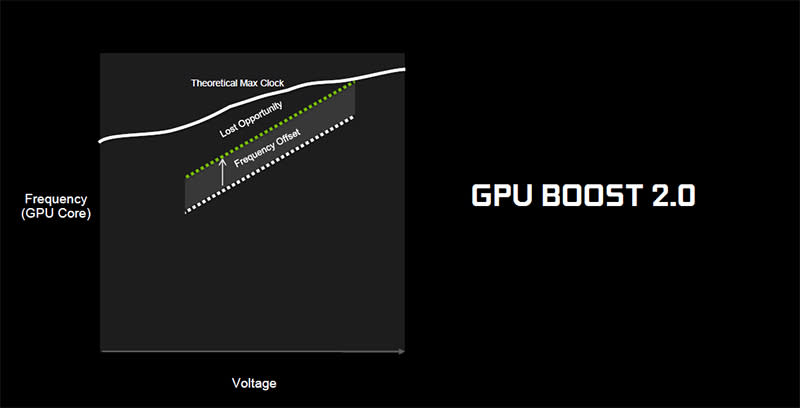

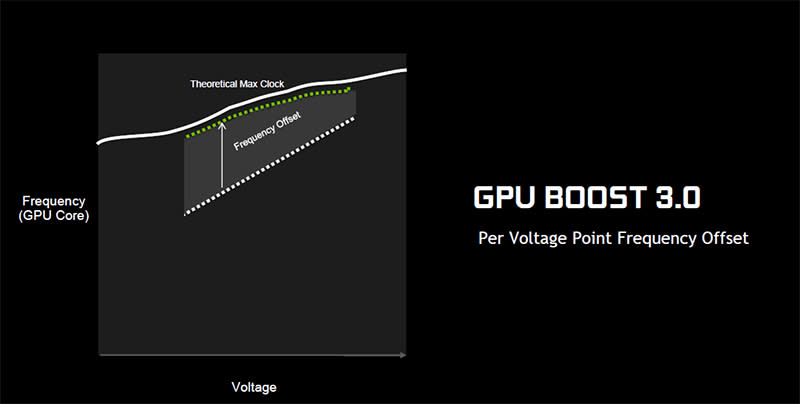

Pascal also sees the introduction of a new version of GPU Boost. With GPU Boost 3.0, NVIDIA now offers custom per-voltage point frequency offsets, allowing the GPU to maximize the headroom beyond a fixed frequency offset. It is a natural evolution of GPU Boost 2.0 – while the latter could already dynamically vary the boost speed according to temperature and load, it remained limited to a fixed frequency offset across all voltages. The problem with this is that the resulting voltage/frequency curve isn’t necessarily in sync with what the GPU is really capable of, resulting in lost potential headroom.

GPU Boost 3.0's support for per-voltage offsets corrects this. By adding an additional parameter to vary the frequency offset by – in this case voltage – any lost performance opportunity can be minimized.
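Here is a small sketch of why a single fixed offset leaves headroom on the table. Every voltage and frequency value below is hypothetical and chosen purely for illustration; none of them were measured on a real card.

```python
# Hypothetical voltage (V) -> frequency (MHz) points; not taken from any card.
stock_curve = {0.80: 1500, 0.90: 1650, 1.00: 1750, 1.06: 1800}
max_stable = {0.80: 1700, 0.90: 1800, 1.00: 1850, 1.06: 1950}

# Headroom available at each voltage point.
headroom = {v: max_stable[v] - stock_curve[v] for v in stock_curve}

# GPU Boost 2.0 style: one offset for all voltages, capped by the weakest point.
fixed_offset = min(headroom.values())
fixed_curve = {v: f + fixed_offset for v, f in stock_curve.items()}

# GPU Boost 3.0 style: each voltage point gets its own offset.
per_point_curve = {v: stock_curve[v] + headroom[v] for v in stock_curve}

# Frequency left unused by the fixed offset at each voltage point.
lost = {v: per_point_curve[v] - fixed_curve[v] for v in stock_curve}

for v in stock_curve:
    print(f"{v:.2f}V: fixed {fixed_curve[v]}MHz, "
          f"per-point {per_point_curve[v]}MHz, lost {lost[v]}MHz")
```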

Also new is support for overclocking scanners, like the OC Scanner utility now built into the latest version of EVGA’s Precision XOC software, that automatically stresses the GPU and creates a custom V/F curve unique to your individual card. That effectively takes some of the guesswork out of overclocking, allowing you to get more out of the card more quickly and easily.

FastSync, on the other hand, is yet another technology to provide a smoother, tear-free, and low latency gaming experience in the tradition of V-Sync and G-Sync, except it has nothing to do with the panel's refresh rate. And while G-Sync sees the most benefit in demanding games that operate below your monitor’s refresh rate, FastSync targets less demanding titles that can output frames well in excess of the panel’s refresh rate. You can enable it as a new option in the NVIDIA Control Panel.

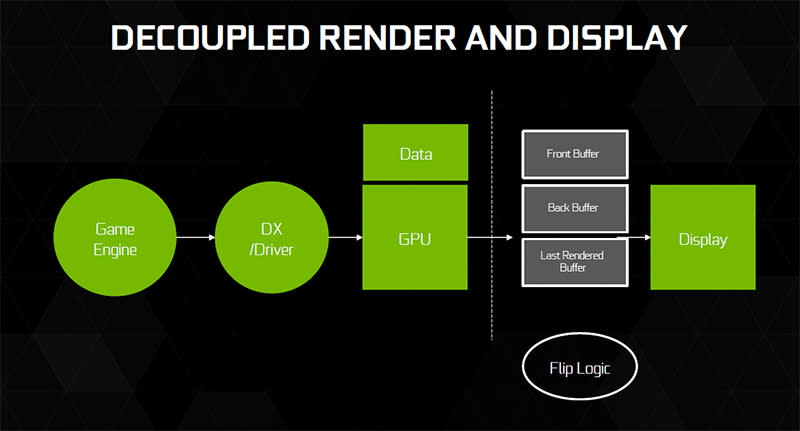

One such game it addresses is Counter-Strike: Global Offensive, which is typically capable of running in the range of 200 to 300fps on high-end cards. FastSync’s objectives are to provide a low latency gaming experience, while still eliminating tearing, and it does this by decoupling the frame rendering and display stages from the rest of the pipeline.

This allows the GPU to continue to work as fast as it can, and store excess frames temporarily in the GPU frame buffer. If V-Sync were on, the game engine would have to wait for the display to refresh, creating a backpressure of frames and increasing input latency. With FastSync, the GPU can render frames unhindered, so there is no backpressure and latency is reduced, but it also cherry-picks frames from the frame buffer to display, so tearing is avoided as well.

This is made possible by the addition of a third frame buffer called the Last Rendered Buffer (LRB) to the existing front and back buffers. As its name suggests, the LRB holds the most recently rendered back buffer, until the front buffer finishes scanning. The LRB is then copied to the front buffer, and the process repeats itself. But the crucial thing to note is that the LRB and back buffer follow a flip logic, so they effectively take turns holding the latest rendered frame while waiting for a scan interval to complete, which is how the pipeline can continue without slowing down.
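The buffer logic described above can be sketched as a tiny simulation. This is a toy model of our own rather than anything from NVIDIA's driver: time advances in whole milliseconds, buffer copies are treated as free, and the function name and parameters are hypothetical.

```python
def simulate_fast_sync(render_ms: int, refresh_ms: int, duration_ms: int) -> list:
    """Return the frame numbers shown at each display refresh."""
    front = None          # frame currently being scanned out to the display
    last_rendered = None  # Last Rendered Buffer: newest complete frame
    back = 0              # frame number the GPU is currently rendering
    shown = []
    for t in range(1, duration_ms + 1):
        if t % render_ms == 0:
            # Frame finished: the back buffer and LRB swap roles (flip logic),
            # and the GPU starts on the next frame without waiting.
            last_rendered = back
            back += 1
        if t % refresh_ms == 0 and last_rendered is not None:
            # Scan interval complete: the newest whole frame goes to the front buffer.
            front = last_rendered
            shown.append(front)
    return shown


# A game rendering a frame every 4ms (250fps) on a panel refreshing every 16ms.
displayed = simulate_fast_sync(render_ms=4, refresh_ms=16, duration_ms=64)
print("Frames displayed:", displayed)
```

Only whole frames ever reach the front buffer, so there is no tearing, and the frames that are skipped never hold up the renderer.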

A new single-GPU king

This may be a reference design (sorry, we really mean Founders Edition), but NVIDIA has nevertheless made some design improvements under the hood.

Compared to its predecessors, the new Founders Edition card sports an upgraded 5-phase power delivery circuit (it was a 4-phase design before), extra capacitance to the filtering network, and optimizations for lower impedance. In addition to gains in power efficiency, NVIDIA claims this also results in less voltage noise, which could potentially lead to better overclocking.

The card is cooled by a typical vapor chamber design and a blower fan that exhausts heat out the back, so it’s quite suitable for small form factor systems as you don’t want heat accumulating inside one of those tiny cases.

This is also the first reference card we’ve seen to come with a metal backplate, which protects the PCB and adds rigidity to the card.

Then there’s the diecast aluminum body that’s really quite a joy to look at. The design represents a departure from previous reference cards, featuring what NVIDIA is calling a “faceted” body that is all sharp ridges and angular lines. This is one of the most aggressively designed cards we’ve seen from NVIDIA, and it seems almost befitting of the performance it dishes out.

Here’s a look at the single 8-pin PCIe connector, which is all that’s needed to power the 180-watt card.

Round the back, there are three DisplayPort 1.4 connectors, one HDMI 2.0b port, and a dual-link DVI output. We should note that these all represent the latest display standards, which also happen to be HDR ready. Earlier this year, AMD announced that all its next generation GPUs would also support HDR displays and content, in addition to higher resolution, higher refresh rate panels with DisplayPort 1.3, so it’s really no surprise that NVIDIA has done the same here as well. You can refer to our coverage of AMD’s announcement for more details on what HDR and the new standards entail.

Finally, NVIDIA also announced a new SLI interface, starting with the GeForce GTX 1080. The card officially only supports two-way SLI, which may come as a surprise to some enthusiasts. According to NVIDIA, challenges in achieving meaningful performance scaling improvements in three- and four-way setups led it to its decision to officially support only two-way SLI, although it will still be possible for hardcore users to go beyond two cards if they wish.

NVIDIA also announced a new high bandwidth (HB) SLI bridge, which combines both SLI connectors to enable a dual-link mode. This creates a higher bandwidth link between the two cards, the primary benefit of which will be felt mostly at higher resolutions and refresh rates.

Test Setup

The detailed specifications of our current graphics card testbed system are as follows:

Intel Core i7-5960X

ASUS X99-Pro (Intel X99 chipset) motherboard

2 x 4GB Corsair Vengeance LPX DDR4-2133 (Auto timings: CAS 15-15-15-36)

Samsung SSD 840 Pro 256GB SATA 6Gbps solid state drive (OS + benchmark + games)

Western Digital Caviar Black 1TB SATA 6Gbps hard drive (general storage)

Windows 7 Ultimate 64-bit SP1

Intel INF 10.0.20

NVIDIA supplied us with two versions of the beta drivers, ForceWare 368.13 and 368.16. We used the former for benchmarking, but the latter is required for overclocking. There are no performance differences between the two. For our comparison cards, we naturally went with NVIDIA’s top-performing cards from the last generation, including the previous single-GPU king, the GeForce GTX Titan X, and custom versions of the GeForce GTX 980 Ti and 980. Note that these custom cards feature significant factory overclocks over their reference versions, which is why the performance numbers obtained don’t exactly line up with NVIDIA’s claims on its marketing material. In addition, we threw in the AMD Radeon Pro Duo, a dual Fiji card, to get an idea of how the GeForce GTX 1080 performs against a dual GPU part.

The full line-up of graphics cards and their driver versions is listed below:

NVIDIA GeForce GTX 1080 (ForceWare 368.13)

NVIDIA GeForce GTX Titan X (ForceWare 365.19)

ASUS ROG Matrix GTX 980 Ti Platinum (ForceWare 365.19)

MSI GeForce GTX 980 Gaming 4G (ForceWare 365.19)

AMD Radeon Pro Duo (Crimson Edition 16.5.2.1)

Benchmarks

We’ve updated our performance benchmarks to include a handful of the latest titles. Two benchmark games, Hitman and Ashes of the Singularity, also take advantage of DirectX 12, so we were also able to get a look at how the GeForce GTX 1080 performed using Microsoft’s latest gaming API.

Here’s the list of the benchmarks we used:

Futuremark 3DMark (2013)

Middle-earth: Shadow of Mordor

Crysis 3

Tom Clancy’s The Division

Ashes of the Singularity

Hitman

We used the Fire Strike Extreme test in 3DMark (2013) for our power and temperature tests.

Futuremark 3DMark (2013)

The NVIDIA GeForce GTX 1080 wasted no time demonstrating its chops in 3DMark. It consistently topped all of NVIDIA’s previous top performers, and only fell behind the AMD Radeon Pro Duo, which is a dual-GPU card. And even then, the performance difference between the two was actually quite slim, not at all resembling the lead you’d expect a dual-GPU card to take over a single-GPU counterpart.

In Fire Strike, the Radeon Pro Duo was only around 13 percent faster than the GeForce GTX 1080. This lead widened to over 20 percent in Fire Strike Extreme and Ultra, which is still not exactly commanding, no doubt due to the Radeon Pro Duo’s staggering bandwidth advantage thanks to the combined bandwidth of two Fiji GPUs with HBM.

Compared to the GeForce GTX Titan X, the GeForce GTX 1080 turned out to be around 13 percent faster in Fire Strike – not bad at all for a card that also happens to be US$300 cheaper. Even more impressive was the fact that this advantage grew to an approximately 20 percent lead in Fire Strike Extreme and Ultra, which certainly bodes well for more demanding applications like 4K gaming and VR. Not every card can claim to have beaten last generation’s undisputed king by such a large margin, and this isn’t even a Titan-class card.

Middle-earth: Shadow of Mordor

In Shadow of Mordor, the GeForce GTX 1080 came ahead of everything else as well, save for the Radeon Pro Duo. At a resolution of 2,560 x 1,600 pixels, the flagship Pascal card was a whopping 32 percent faster than the GeForce GTX Titan X. It maintained this lead at a 4K resolution, where it was also around 17 percent faster than our factory overclocked GeForce GTX 980 Ti. As it turns out, the heavy out-of-the-box overclocks of the latter even helped it edge out the reference GeForce GTX Titan X, but it was still no match for the GeForce GTX 1080.

How much faster is it than the GeForce GTX 980, the card it ostensibly replaces? Well, a staggering 63 percent at a 4K resolution.

Crysis 3

The GeForce GTX 1080 continued on a tear in Crysis 3. And unfortunately for the Radeon Pro Duo, NVIDIA’s latest flagship really exposes some glaring weaknesses in it. In our benchmarks with MSAA turned off, the GeForce GTX 1080 came out on top of all the other cards. That turned out to be around 24 percent faster than the GeForce GTX Titan X at a quad HD resolution, and 11 percent quicker than the factory overclocked 980 Ti. And sadly for the GeForce GTX 980, it was left in the dust once again as the new card powered ahead to a 52 percent lead.

At our most demanding test settings (2,560 x 1,600 pixels, 8x MSAA), the Radeon Pro Duo managed to reclaim the lead, but the GeForce GTX 1080 continued to hold sway over everyone else.

Tom Clancy’s The Division

In The Division, the GeForce GTX 1080 likewise continued to edge out the previous generation cards, beating AMD’s dual-GPU flagship as well. At the most demanding settings, it was around 13 percent faster than the GeForce GTX Titan X. It also had a 7 percent lead over the factory overclocked 980 Ti.

We’re actually seeing a consistent pattern of the overclocked 980 Ti outperforming the reference Titan X here, so it will be interesting to see how much custom versions of the GeForce GTX 1080 cost in order to get a better idea of its value vis-à-vis a premium 980 Ti card like the ASUS ROG Matrix GTX 980 Ti Platinum.

Benchmarks - Part 2

Ashes of the Singularity

Now here’s where it starts to get even more interesting. Ashes of the Singularity supports both DirectX 11 and 12, so we were able to benchmark the cards in both modes to see how well they were able to take advantage of DX12 features like asynchronous compute. This is also the game in which AMD has held a strong advantage over NVIDIA, thanks in large part to GCN’s asynchronous shaders. However, it looks like NVIDIA has wrested back control in the battle to maximize the potential of DX12.

Unlike its Maxwell cards that eked out paltry gains at best when moving from DX11 to 12, the GeForce GTX 1080 demonstrated a marked 19 percent improvement in performance at a 1080p resolution and High settings. However, the performance boost was whittled down as we ramped up the resolution and settings, until there was barely any improvement at the most demanding settings. This was in contrast to the Radeon Pro Duo, which still managed to post a 24 percent improvement upon switching to DX12.

NVIDIA and AMD both support asynchronous compute slightly differently, so it may be that Ashes is better able to take advantage of AMD’s implementation.

Hitman

Note: The AMD Radeon Pro Duo ran into some glitches while running in DirectX 11 mode in Hitman. Not only did the benchmark take inordinately long to load, it consistently crashed with all the settings set to maximum, which was why we were unable to obtain a score for our final DX11 benchmark.

Like Ashes of the Singularity, 2016’s Hitman supports both DirectX 11 and 12 as well, and it provides another way to test the GeForce GTX 1080’s DX12 performance. The GeForce GTX 1080 actually had a particularly strong showing in Hitman, coming in at around 17 percent faster than the ASUS ROG Matrix GeForce GTX 980 Ti Platinum while running DX12. The lead over the GeForce GTX Titan X was even larger, at an impressive 33 percent. What about the GeForce GTX 980 you ask? Well, the new Pascal card is still an eye-popping 62 percent faster.

On top of that, the GeForce GTX 1080 posted decent performance improvements when moving from DX11 to 12. One interesting thing to note is that the gains are a lot larger at a 1080p resolution, regardless of the other settings. For instance, while it enjoyed a 20 percent improvement at a 1080p resolution and Ultra settings, it only had a modest 10 percent gain at 1600p.

Temperature and Power Consumption

There isn’t much to remark on in the temperature department. At 82°C, the GeForce GTX 1080 performed more or less in line with the Titan X, which uses a similar cooling design. The other cards utilized custom coolers, so it’s no surprise that they wound up being quite a bit cooler.

But be that as it may, do note that our readings were obtained over an extended period of looping 3DMark Fire Strike Extreme, and temperatures during real-world use are actually quite a bit lower. Furthermore, the blower-style fan proved quite effective at bringing temperatures down quickly. In fact, we noticed the temperature was able to drop from around 60°C to 40°C in a matter of seconds once the card stopped working so hard.

The standout characteristic here is really the power consumption. Thanks to the 16nm FinFET process and various other efficiency tweaks, the total system power consumption for the GeForce GTX 1080 was a mere 286 watts in our power consumption tests, around the same as the much weaker GeForce GTX 980. Clearly, a lot can change in just one generation.

Overclocking

Using a beta version of EVGA’s Precision XOC utility provided to us by NVIDIA, we were able to achieve a 246MHz overclock over the base speed, and boost the memory clock to 10,704MHz. However, one quirk is that these changes are not reflected in GPU-Z, even though the GPU is clearly being overclocked, as evinced by our benchmark scores (we’re not sure if this is a result of GPU Boost 3.0, or if it’s merely a glitch in the beta software or GPU-Z). The overclock ultimately translated into a boost clock of 2,114MHz, although our monitoring software showed that the card only peaked at this level in the most demanding scenes.

In 3DMark, this resulted in anywhere between a 5 and 10 percent boost in performance. That may not seem like a lot, but bear in mind that this is just a synthetic benchmark, and your mileage in actual games may very well vary.

The reason GeForce GTX Titan X owners are going to bed in tears

So there you have it. The GeForce GTX 1080, NVIDIA’s latest and greatest card. Pascal brings a lot of new features to the table, not least of which are markedly improved power efficiency, bleeding-edge performance, and new ways to improve our experience with VR headsets and multi-display setups.

In a lot of ways, Pascal seems like a dream come true. After all, this is the card that schooled the US$999 GeForce GTX Titan X – the previous gold standard for performance you want but can’t afford – in our benchmarks, all while costing only US$699. It also quite successfully harnesses the potential of DirectX 12, while also positioning itself to capitalize on the upcoming wave of VR hardware. To cap things off, it even introduced GPU Boost 3.0, a new version of GPU Boost that helps the card perform closer to its true potential.

The only dampener is the US$100 premium NVIDIA has slapped on its Founders Edition cards. The company wants to turn these into its own source of profit, and AMD’s lack of an appropriate riposte has allowed it to do so. Competition is always in the best interests of consumers, and the lack of it is worrying in that the market leader – in this case NVIDIA – may get complacent, turn out less competitive products, and feel no pressure to make pricing attractive. Here's how much you can expect it to retail for in Singapore.
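For a rough sense of what those price tags mean in relative terms, the figures quoted in this review can be run through a short Python sketch. The helper names below are our own and purely illustrative, and the prices are the US launch prices cited in this article, not street prices.

```python
# Illustrative helpers (hypothetical names) for comparing the launch prices
# quoted in this review. All prices are in US dollars.

def percent_less(new_price, old_price):
    """Return how much cheaper new_price is than old_price, in percent."""
    return (old_price - new_price) / old_price * 100


def percent_premium(premium, base_price):
    """Return a price premium as a percentage of the base price."""
    return premium / base_price * 100


TITAN_X = 999            # GeForce GTX Titan X
GTX_1080_FOUNDERS = 699  # GeForce GTX 1080 Founders Edition
GTX_1080_PARTNER = 599   # GeForce GTX 1080 from add-in board partners

saving = percent_less(GTX_1080_FOUNDERS, TITAN_X)
premium = percent_premium(GTX_1080_FOUNDERS - GTX_1080_PARTNER, GTX_1080_PARTNER)

print(f"Founders Edition vs Titan X: {saving:.1f}% cheaper")      # 30.0% cheaper
print(f"Founders Edition premium over partner cards: {premium:.1f}%")  # 16.7%
```

In other words, the Founders Edition undercuts the Titan X by about 30 percent, but the US$100 premium still works out to roughly a sixth on top of the partner card price.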

But for now at least, NVIDIA is still on top of its game and has truly outdone itself this time. We’ll look at the Crysis 3 benchmark to illustrate our point, since that’s the one benchmark we’ve kept constant. When the GeForce GTX 980 first launched, it turned out to be only around 5 percent faster than the GeForce GTX 780 in Crysis 3. That’s pretty underwhelming when taken alone, but once you consider that the GeForce GTX 1080 has consistently been over 60 percent faster than the 980, things start to really take on a new perspective.

Of course, the most valid comparisons at this point are with the GeForce GTX Titan X and 980 Ti, both of which are still beaten quite handily by the GeForce GTX 1080. The interesting thing is that NVIDIA may have just introduced a new performance class here. This is not a Ti- or Titan-level card, but it dishes out even more performance than last generation’s cutting-edge flagships. There’s clearly a cascading effect at work here, and the performance in the ultra-enthusiast tier has officially trickled down in huge gobs into the merely “high-end” category. What’s more, the GeForce GTX 1070 costs just US$449, but supposedly offers Titan X levels of performance.

If this is just the beginning of what Pascal has to offer, we can’t wait to see what Pascal’s Ti- or Titan-class cards will bring to the table (HBM2 please?), if and when NVIDIA decides to release them. Technological progress is truly a great thing to behold.