Speech recognition system can transcribe Singaporean lingo in real time

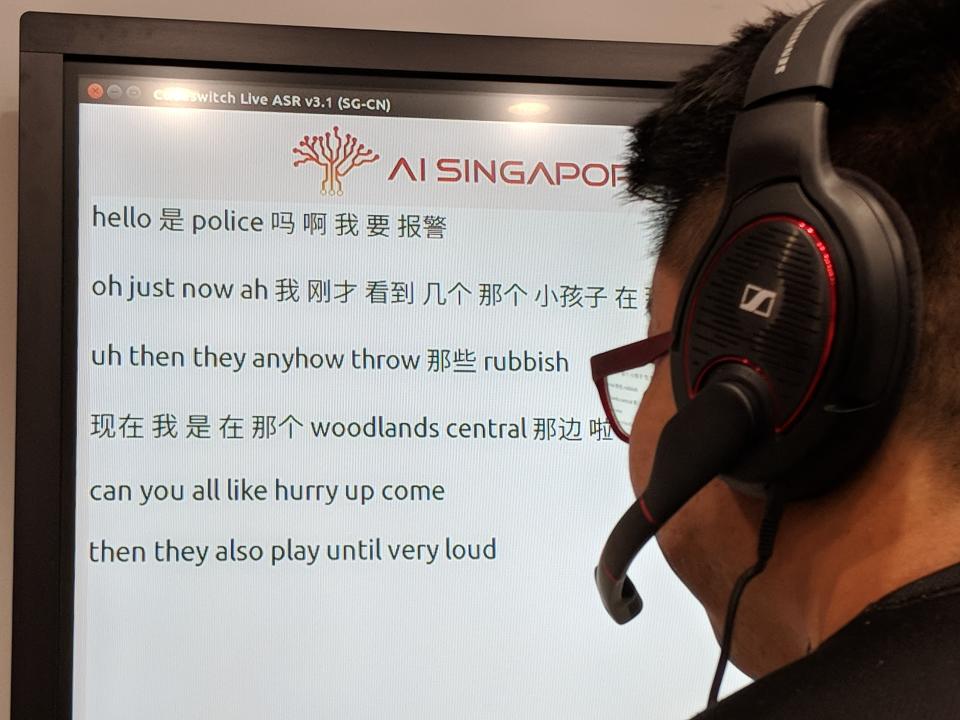

“Hello, 是 police 吗? 啊, 我要报警…uh then they anyhow throw 那些 rubbish…can you all like hurry up come?”

This phone call could have been made by a Singaporean resident to the police. But it was actually a simulated emergency call, transcribed by a homegrown speech recognition system that is able to interpret Singaporean lingo on the fly.

Demonstrated live to the media on Monday (9 July), the system is being developed by the Republic’s national programme in artificial intelligence, AI Singapore (AISG), in its AI Speech Lab.

The speech engine, named Southeast Asian Mandarin-English (SEAME), is touted by its creators as adept at recognising “code-switching”, the practice of alternating between two or more languages in conversation.

The system now has a combined vocabulary of about 80,000 words, mostly in English and Mandarin. It can adapt to local accents of spoken English.

“Our technological breakthrough is the outcome of…research efforts in Singapore (that started a decade ago),” said National University of Singapore (NUS) Professor Li Haizhou, who co-leads the speech lab. “This technology performs better than commercial engines as it can accurately recognise conversations comprising words from different languages and solves a unique Singaporean problem.”

The system is currently “90 per cent accurate in a quiet environment”, according to Prof Li, who is with the Department of Electrical and Computer Engineering and the Department of Mechanical Engineering at NUS.

For now, its Hokkien, Malay, Tamil and Singlish lexicon is limited to basic phrases like “jiak ba bueh”, “hoh boh” (“Have you eaten?” and “How are you?” in Hokkien, respectively), “lah”, “loh”, as well as food and street names such as char kway teow, nasi lemak and Jalan Besar.

When more data is collected, Prof Li’s team may expand the system’s code-switching capabilities to include English and Tamil or English and Malay.

The team is working on a “stable” version for commercial use to be ready in 12 to 18 months. Their first partner? The Singapore Civil Defence Force (SCDF).

“Our first step is to make the ‘code-switching’ work (better),” said Prof Li. “Our next step is to work with partners to include vocabulary they would like to incorporate. For example, if it’s the SCDF, things will be concerning the deployment of medical services, or fire-related (incidents).”

The SCDF’s 995 Operations Centre receives close to 200,000 calls for assistance every year, according to Assistant Commissioner Daniel Seet, SCDF Director of Operations, and the new system, “if successful, will help (operators) reduce the time needed to log in information” relating to emergencies.

The team will use the coming months to harvest data from real calls made to the SCDF centre, said the lab’s co-lead, Assistant Professor Chng Eng Siong of the School of Computer Science & Engineering at Nanyang Technological University (NTU).

“We will visit the site and collect the calls (excluding personal data)…as many as possible, hundreds of hours of SCDF calls. We will send people down (to the site) to transcribe and run our engine through their system to study our problems and errors,” said Asst. Prof Chng.

The system currently has up to 2,000 hours of audio data, about half of it from Singapore sources, including conversations on the ground as well as audio clips from radio station 938NOW and from Singaporean YouTubers. In comparison, search giant Google has about 10,000 hours to train its AI system, said Asst. Prof Chng.

Over the last three to four years, the team has collected conversational data in Singapore and Penang, two cities in the region where code-switching between English and Mandarin is more common.

Code-switching poses a huge challenge because the system has to deal with verbal input as if it were a single language, said Asst. Prof Chng. Different pronunciations of the same word pose further challenges, he added.
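To give a rough sense of how often a speaker can switch languages within one utterance, here is a minimal Python sketch that groups a written transcript into English and Mandarin runs. It is an illustration only and not the AISG system's method: it works on text rather than audio, the function names `language_runs` and `count_switches` are hypothetical, and it assumes Mandarin can be spotted simply by the presence of Chinese characters.

```python
import re

# Assumption: a token is Mandarin if it is made of CJK Unified Ideographs,
# and English if it is made of Latin letters. Punctuation is ignored.
CJK = re.compile(r"[\u4e00-\u9fff]")
TOKEN = re.compile(r"[\u4e00-\u9fff]+|[A-Za-z']+")


def language_runs(text):
    """Group consecutive tokens of the same language into runs."""
    runs = []
    for match in TOKEN.finditer(text):
        token = match.group()
        lang = "zh" if CJK.search(token) else "en"
        if runs and runs[-1][0] == lang:
            # Same language as the previous token: extend the current run.
            runs[-1][1].append(token)
        else:
            # Language changed (or first token): start a new run.
            runs.append((lang, [token]))
    return runs


def count_switches(text):
    """Number of points where the speaker changes language."""
    return max(len(language_runs(text)) - 1, 0)


sample = "Hello, 是 police 吗? 啊, 我要报警"
for lang, tokens in language_runs(sample):
    print(lang, tokens)
print("switches:", count_switches(sample))
```

Even this short opening of the simulated call changes language several times, which is why a recogniser cannot assume one language per utterance.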

When asked whether the system would be able to handle translation in future, Prof Li said, “If translation (capabilities) are needed, that can be done in the lab as well. We have the technology to do this.”

In due course, the system could be deployed at various government agencies and companies to assist frontline officers while they focus on customer service, as well as in areas such as voice data mining and live subtitling, said Prof Li.

“For public services, we cannot pump our conversations to Google. We don’t want our confidential conversations to go into a company’s database so we need to have our own Singapore-based speech recognition engine to serve our citizens,” he added.

AISG is investing $1.25 million to set up the speech lab, with four government agencies on board to match the investment, bringing total funding to $2.5 million over the next three years.

Located within the National University of Singapore’s Kent Ridge campus, the lab is already operational. It occupies a floor area of 125 sqm and will eventually have five AI engineers.

The lab marks AISG’s first major collaboration with multiple government agencies to design an AI system that could be deployed government-wide or nation-wide.
