What AI mavens can learn from a 1975 genetics conference

In March, more than 10,000 people, including Elon Musk and Apple co-founder Steve Wozniak, signed an online letter urging all AI labs to hit pause on training systems more powerful than GPT-4. The letter’s concerns over AI run deeper than disruptions to the job market or the information ecosystem. They deal with “unknown unknowns”: risks that we cannot imagine yet, but that will emerge as companies rush into an arms race to develop increasingly powerful AI tools.

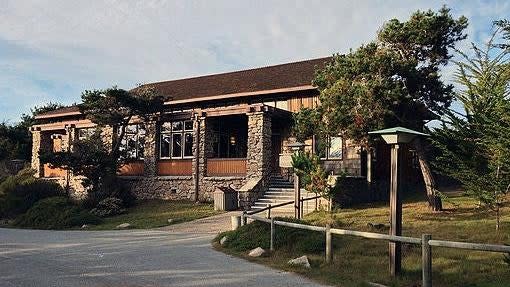

The letter cited the Asilomar AI Principles, developed by computer scientists at a 2017 conference. The 23 principles call for a planned, managed approach to developing “beneficial,” rather than undirected, AI. The venue for this gathering, the oceanside Asilomar Conference Grounds in California, was picked deliberately. It was at this very place, in 1975, that geneticists convened to draw up voluntary guidelines on how to use and manipulate DNA in their research.

Those guidelines still resonate in genetics today, and cautious AI scientists want to build their own version for their field. (The US government is worried as well; in May, the Federal Trade Commission warned tech companies not to shed employees working on AI ethics, and the White House has summoned Google and Microsoft executives to talk about AI safeguards.) So what can AI researchers learn from a genetics conference held in Asilomar half a century ago?

1. Communicate the unknown risks of new technology

The 1975 conference was born out of a concern about recombinant DNA technology, which uses enzymes and lab techniques to create new genes. Despite knowing that these advances could lead to new opportunities in medicine and agriculture, scientists fretted that they might inadvertently produce cancer-causing agents, Paul Berg, a former Stanford biochemist, wrote in Nature in 2008.

In mid-1974, Berg led a committee that voiced these concerns to the National Academy of Sciences and published them in well-known journals. They urged scientists to observe a voluntary moratorium on some kinds of recombinant DNA experiments until their dangers could be evaluated. Their remarks created a media buzz and sparked scientific debate, Berg wrote. The Asilomar conference was born, in part, as a result of that firestorm. Berg’s committee showed how important it was for experts to go public with their misgivings—as Geoffrey Hinton, one of the world’s top computer scientists, did on May 1, when he quit Google to speak freely about the risks of AI.

2. Establish safety guidelines for AI

In 1975, Berg and four other scientists drew up guidelines for the three-and-a-half-day Asilomar conference, to decide whether and under what conditions to lift the voluntary moratorium. During the conference, amid heated discussions, scientists acknowledged the risks of others' experiments but not of their own, Berg observed. Another scientist warned that doing nothing would lead to public backlash, government interference, or legislation, Berg wrote in Nature.

In the end, experts assigned risk estimates to different experiments, applying safety guidelines accordingly. On the final day of the conference, participants agreed that research should continue but under strict limits, helping to form the basis of the official US guidelines on research involving recombinant DNA issued in July 1976. “They have proved remarkably effective,” Berg said. The Asilomar AI conference from 2017, as yet, only has principles; what AI needs is industrywide agreement on what kinds of research hold hazards, and how to limit them.

3. Oversight is no bad thing

The 1975 guidelines spread in a way that welcomed oversight. Some attendees went to university leaders to argue that new security facilities were now required for their work, according to the National Institutes of Health. A review body, the US National Institutes of Health's Recombinant DNA Advisory Committee, was founded in 1976 and still functions. In a 1990 meeting, for instance, one of its tasks was to consider the extension of an experiment to insert a recombinant gene into patients as a marker for new therapeutic approaches to cancer. In the UK, the Advisory Committee on Genetic Modification advises the Health and Safety Executive and Ministry of Environment, the authority that regulates the use of recombinant DNA technology in the country.

A more informal but also more potent form of oversight came from the public. About 15% of Asilomar’s participants in 1975 were from the media, according to Berg, so the public had a window on the proceedings. Many scientists feared that public debates would hinder innovation in the field; instead, Berg thinks they encouraged responsible discussions.

4. The problem of Big Tech

One key difference between genetics in 1975 and AI today lies in the institutions involved. In the 1970s, most scientists engaged in recombinant DNA research worked in public institutions, so they were able to get together and voice their opinions, Berg wrote. But most AI scientists work for private companies, so the line between the public and private sectors is blurred. Many issues in science and technology are “beset by economic self-interest,” Berg added.

A handful of powerful companies drive generative AI today, and secrecy comes naturally to them. OpenAI doesn’t offer details of how its model is trained, citing the “competitive landscape and safety implications” of GPT-4. Chatbot creators have admitted that their AI tools aren’t perfect, yet that hasn’t stopped them from releasing products in an effort to stay ahead of rivals.

Last month, in front of a Congressional panel, Aleksander Mądry, a professor of computing at the Massachusetts Institute of Technology, urged lawmakers to ask rigorous questions about the technology being deployed. He also stressed the importance of not letting the future of AI lie solely within the hands of a few tech companies.

Since Asilomar 1975, genetics researchers have carried out recombinant DNA experiments without any major reported incidents, Berg wrote. A search on Google doesn’t show any headlines with concerns over splicing DNA. Once the Asilomar guidelines took root over time, a biotech industry emerged. Starting in the mid-1980s, genetically modified hormones—such as those used to enhance food crops—and medical diagnostic tools were born out of this new technology. At least thus far, five decades after Asilomar, the biotech industry has safely created products, jobs, and wealth. It is a model to which the AI field could well aspire.
