Performance Review: NVIDIA GeForce GTX 980 Ti

The future of PC Gaming: DirectX 12, 4K gaming, and virtual reality

Following the release of the beast of a graphics card that was the NVIDIA GeForce GTX Titan X, rumors swirled that NVIDIA would be releasing another high-end card to fill the gap between the GeForce GTX 980 and the Titan X. As it turns out, the rumors are true. NVIDIA today took the wraps off the GeForce GTX 980 Ti, a GM200-based card that purports to offer performance similar to the Titan X but with less video memory. And given that AMD is poised to release its Radeon R9 300 series of graphics cards this June, we’re guessing that the timing of NVIDIA’s launch is no coincidence.

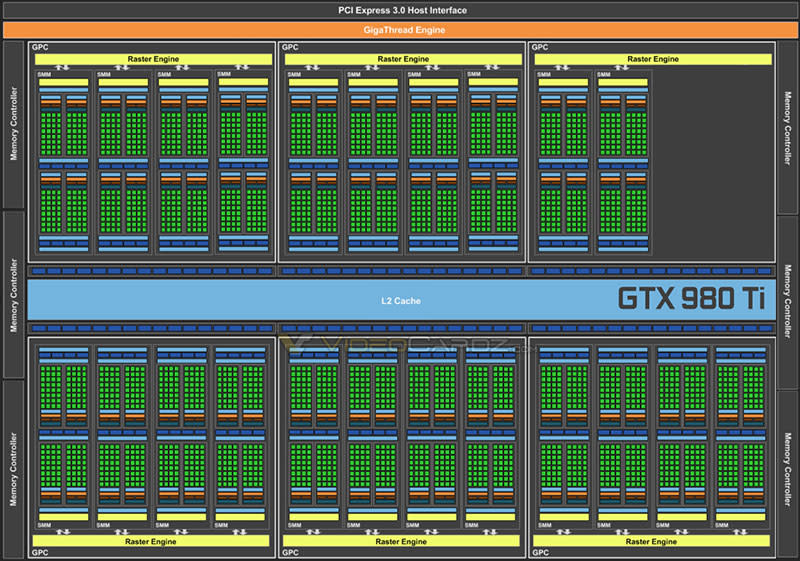

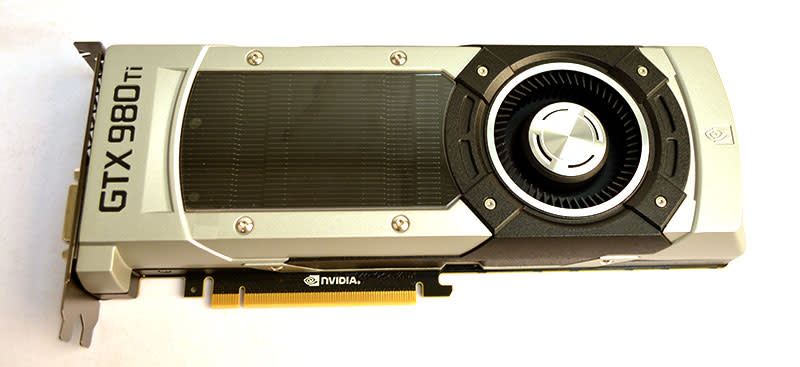

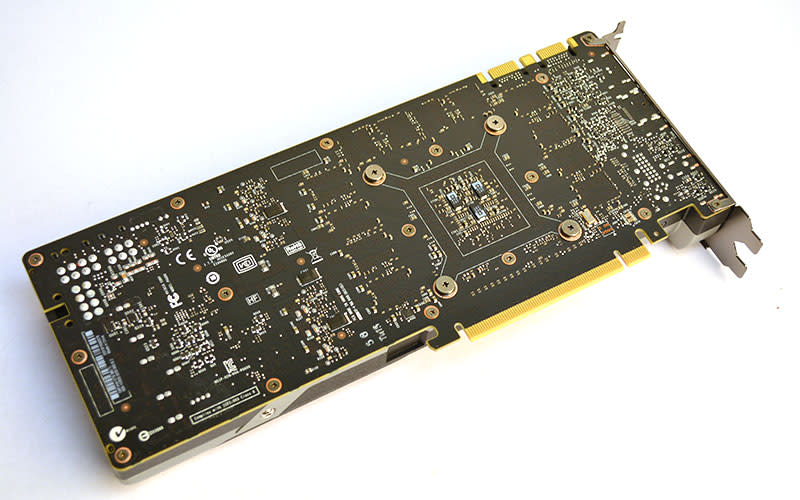

At first glance, NVIDIA’s new card appears quite similar to the GeForce GTX Titan X, save for the fact that its cooling shroud is a slate gray instead of black. Under the shroud, it features a GM200 GPU that packs a stunning 8 billion transistors, like the Titan X. However, unlike the GeForce GTX 780 Ti, which turned out to be even more powerful than 2013’s Titan, the GeForce GTX 980 Ti ships with 22 Streaming Multiprocessors (SMMs) and 2816 CUDA cores, down from 24 SMMs and 3072 cores on the Titan X.

The GeForce GTX 980 Ti also sports 176 texture mapping units (TMUs), putting it right smack between the GeForce GTX Titan X and the 980. However, it retains six Graphics Processing Clusters (GPCs) like the Titan X, giving it a 384-bit memory bus width to deal with memory-hungry 4K resolutions and VR gaming. In addition, the new card boasts 6GB of GDDR5 memory with an effective memory clock of 7010MHz. This puts its peak memory bandwidth at 336.5GB/s, 50% higher than that of the GeForce GTX 980.
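For readers who want to check the arithmetic behind these figures, here is a minimal sketch. The per-SMM counts (128 CUDA cores and 8 TMUs) and the GeForce GTX 980's 7010MHz effective memory clock are assumptions on our part rather than numbers quoted in this review, and the helper names are purely illustrative.

```python
# Back-of-the-envelope check of the GM200 figures quoted above.
# Assumed (not stated in the review): 128 CUDA cores and 8 TMUs per SMM.
CORES_PER_SMM = 128
TMUS_PER_SMM = 8


def shader_resources(smm_count):
    """Return (CUDA cores, TMUs) for a given number of SMMs."""
    return smm_count * CORES_PER_SMM, smm_count * TMUS_PER_SMM


def peak_bandwidth_gbs(bus_width_bits, effective_clock_mhz):
    """Peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * effective_clock_mhz * 1e6 / 1e9


gtx_980_ti = peak_bandwidth_gbs(384, 7010)
# Assumed: the GTX 980 uses the same 7010MHz effective memory clock
# on its 256-bit bus.
gtx_980 = peak_bandwidth_gbs(256, 7010)

print(shader_resources(22))  # GeForce GTX 980 Ti
print(shader_resources(24))  # GeForce GTX Titan X
print(round(gtx_980_ti, 1), round(gtx_980, 1), round(gtx_980_ti / gtx_980, 2))
```

Under those assumptions, the wider bus alone accounts for the 50% bandwidth advantage over the GeForce GTX 980.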

The card runs at a base clock frequency of 1000MHz and a boost clock frequency of 1075MHz. However, the boost clock frequency is actually an average figure that is derived from the speeds the card usually boosts to over a series of real-world applications and does not represent the maximum clock speed the card can boost to. As NVIDIA was careful to point out, the card could very well boost to even higher clock speeds under heavier workloads and conditions.

In terms of video connectivity options, the card has three DisplayPort outputs, one HDMI port and one dual-link DVI port. The card will of course also support G-Sync, NVIDIA’s variable refresh rate technology that aims to eliminate screen tearing and stutter.

And with a Thermal Design Power (TDP) of 250 watts, it’s powered by two PCIe graphics power connectors: one six-pin and one eight-pin.

Here’s a look at how the GeForce GTX 980 Ti compares against other enthusiast graphics card SKUs in the market:-


With the impending release of AMD’s next-generation cards, NVIDIA could hardly be content to let the red camp steal all the thunder, and it’s hoping that the new GeForce GTX 980 Ti will lead the charge towards what it sees as the next level of PC gaming. In its view, three new technologies are quickly reshaping the gaming landscape – DirectX 12, ultra-high resolution 4K gaming, and virtual reality (VR). The GeForce GTX 980 Ti is designed to deliver performance in all these key areas.

Next-generation API: DirectX feature level 12.1

The NVIDIA GeForce GTX 980 Ti ushers in DirectX feature level 12.1, which enables developers to take advantage of new features like Volume Tiled Resources, Conservative Raster and Raster Ordered Views. All second-generation Maxwell cards will also support these features. While the low-level DirectX 12 API allows developers to work more closely with the hardware and gives them more control over the GPU’s resources in order to reduce CPU overhead and boost performance, DirectX feature level 12.1 builds on this foundation to further improve efficiency and graphics realism.

Volume Tiled Resources improves upon the implementation of Tiled Resources in DirectX 12. The latter method breaks down textures into tiles, so instead of streaming all the textures indiscriminately - which can be quite inefficient and graphically taxing - only the tiles which are required for rendering are stored in the GPU’s memory. This effectively allows game developers to produce higher fidelity graphics while using less memory.

However, Tiled Resources is limited to 2D, planar objects. Volume Tiled Resources changes that with the addition of a third parameter. Now the efficiency of Tiled Resources can be applied to objects which span all three axes, or in other words, 3D textures. Many visual effects used in games are volumetric in nature, such as fluids, clouds, smoke, fire and fog. Volume Tiled Resources allows the GPU to use its memory even more efficiently, which will in turn allow developers to incorporate more intricate details into their games, like smoke that is generated using sparse fluid simulation for greater realism.
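To make the idea of keeping only the required tiles in memory more concrete, here is a rough sketch of a sparsely resident 3D texture. It is a toy model, not DirectX code: the `TiledVolume` class, the 32-voxel tile size and the 256-voxel volume are all hypothetical values chosen for illustration.

```python
# Toy model of a sparsely resident 3D texture. The tile size and the
# volume dimensions are illustrative assumptions, not DirectX values.
TILE = 32  # voxels per tile edge (assumed)


class TiledVolume:
    def __init__(self, width, height, depth):
        # Ceiling division gives the number of tiles along each axis.
        self.tiles_per_axis = tuple(-(-n // TILE) for n in (width, height, depth))
        self.resident = set()  # tiles that actually occupy memory

    def touch(self, x, y, z):
        """Mark the tile containing voxel (x, y, z) as needed for rendering."""
        self.resident.add((x // TILE, y // TILE, z // TILE))

    def total_tiles(self):
        tx, ty, tz = self.tiles_per_axis
        return tx * ty * tz

    def resident_fraction(self):
        return len(self.resident) / self.total_tiles()


volume = TiledVolume(256, 256, 256)  # 8 x 8 x 8 tiles
# A thin column of smoke rising through the middle of the volume.
for y in range(256):
    volume.touch(128, y, 128)

print(len(volume.resident), volume.total_tiles(), volume.resident_fraction())
```

In this made-up scene, the smoke column touches only 8 of the 512 tiles, so under 2% of the volume would need to sit in memory.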

Another key feature of DirectX feature level 12.1 is Conservative Raster, which is essentially a more accurate method for determining whether or not a pixel is covered by a primitive, the basic geometric building block (typically a triangle) of a scene. In traditional rasterization, a pixel is only considered covered if the primitive covers a specific sample point within that pixel, for instance, the center of the pixel.

On the other hand, conservative rasterization rules dictate that a pixel is considered covered if any part of it is covered by a primitive. By providing hardware acceleration for the process, the GPU can perform these calculations more efficiently. This enables game developers to employ new approaches to improve image quality, and conservative raster can prove very useful in certain cases, like generating ray-traced shadows that are free from aliasing or gaps.
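The difference between the two coverage rules is easiest to see with a thin sliver of a triangle. The sketch below is a software illustration only, assuming unit-square pixels with their centers at half-integer coordinates; the function names and the test triangle are our own, and this is not how the GPU implements the feature in hardware.

```python
def edge(a, b, p):
    """Signed area test: positive when p lies to the left of edge a->b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def covers_center(tri, px, py):
    """Traditional rule: covered only if the pixel center is inside the triangle."""
    c = (px + 0.5, py + 0.5)
    a, b, d = tri
    w = (edge(a, b, c), edge(b, d, c), edge(d, a, c))
    return all(v >= 0 for v in w) or all(v <= 0 for v in w)


def covers_any_part(tri, px, py):
    """Conservative rule: covered if the triangle overlaps the pixel square,
    checked with a separating-axis test between triangle and square."""
    corners = [(px, py), (px + 1, py), (px, py + 1), (px + 1, py + 1)]
    axes = [(1, 0), (0, 1)]
    for i in range(3):
        ex = tri[(i + 1) % 3][0] - tri[i][0]
        ey = tri[(i + 1) % 3][1] - tri[i][1]
        axes.append((-ey, ex))  # normal of each triangle edge
    for ax, ay in axes:
        t = [x * ax + y * ay for x, y in tri]
        s = [x * ax + y * ay for x, y in corners]
        if max(t) < min(s) or max(s) < min(t):
            return False  # found an axis that separates the two shapes
    return True


# A thin sliver that slips underneath every pixel center in the bottom row.
tri = [(0.1, 0.1), (3.9, 0.3), (0.1, 0.4)]
grid = [(x, y) for y in range(2) for x in range(5)]
standard = [p for p in grid if covers_center(tri, *p)]
conservative = [p for p in grid if covers_any_part(tri, *p)]

print(standard)      # []
print(conservative)  # [(0, 0), (1, 0), (2, 0), (3, 0)]
```

The traditional rule misses the sliver entirely, while the conservative rule flags all four pixels it passes through, which is the kind of gap-free coverage that techniques like ray-traced shadows rely on.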

Ultra-high resolution 4K gaming

The GeForce GTX 980 Ti was also designed with 4K gaming in mind. While peak memory bandwidth plays an important role in a GPU’s performance, especially at ultra-high resolutions, various bottlenecks within the memory subsystem can also prevent the GPU from achieving its peak performance. To minimize this issue, NVIDIA’s second-generation Maxwell GPUs – which now include the GeForce GTX 980 Ti – employ a new memory architecture that is designed to allow the GPU to use its memory bandwidth more effectively.

Each of the GM200 GPU’s SMM units features its own dedicated 96KB of shared memory, while the L1 and texture caches are combined into a 48KB pool of memory per SMM. In comparison, previous-generation Kepler GPUs were designed with shared memory and L1 cache sharing the same on-chip storage. By merging the functionality of the L1 and texture caches in each SMM, NVIDIA has allowed each SMM to have its own dedicated space of shared memory on GM200. In addition, the GM200 GPU is equipped with 3MB of L2 cache.

And when combined with the GeForce GTX 980 Ti’s 384-bit memory bus width – up from 256-bit on the GeForce GTX 980 – NVIDIA says the new card is fully capable of churning out playable frame rates in the latest games at 4K resolutions.

Multi-res shading for improved VR performance

The GeForce GTX 980 Ti also features support for multi-res shading, which purports to provide a 1.3 to 2x improvement in pixel shader performance over traditional rendering for VR. VR headsets utilize a specially designed lens to create the focus and field of view for an immersive experience. But because the lens introduces its own distortions of an image, the display renders a warped fisheye image to match the optical properties of the lens. The rendered display image features an enlarged center and a compressed periphery, but when viewed through the lens, the viewer sees a correctly proportioned image which allows them to focus on the center.

However, this method is actually rather inefficient because GPUs are designed to render to a 2D screen and cannot natively render the fisheye images needed for VR. To get around this, the GPU first renders the image normally and then warps the image periphery just before it is sent out to the display. This solution works, but because the GPU has to render the entire scene in full detail first before warping the image for a VR display, a lot of pixels are lost. This means that the GPU has essentially wasted resources rendering pixels that are never used.

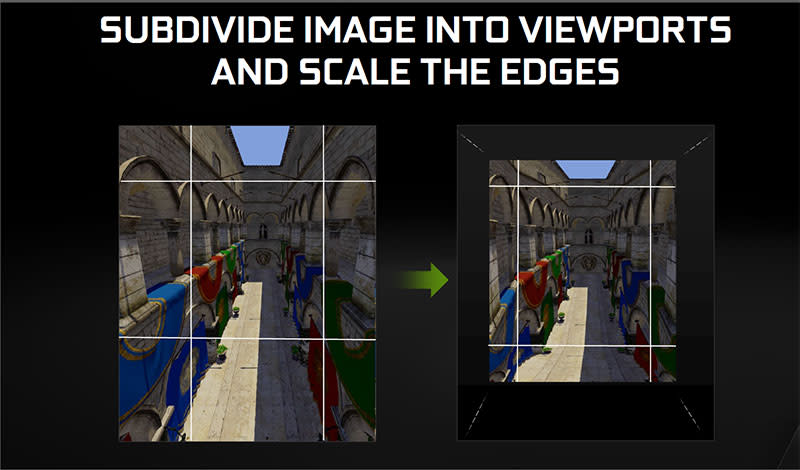

NVIDIA’s multi-res shading intends to address this issue and improve the GPU’s efficiency by utilizing the multi-projection engine – you may know this as something called Viewport Multicast – that is found on all second-generation Maxwell GPUs. Each image is divided into discrete viewports, which are in turn scaled to a different size based on the maximum sampling resolution needed within that portion of the image. While the center viewport stays the same size, much like in traditional rendering, the outer viewports are scaled down to match the lower resolutions needed in the image periphery.
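To get a feel for how much shading work the scaled-down outer viewports could save, here is a simple estimate. It assumes a 3 x 3 viewport grid in which the edge viewports shrink along one axis and the corner viewports along both; the render target size, the center fraction and the scale factor are hypothetical values picked for illustration, not NVIDIA's actual settings.

```python
def multires_pixels(width, height, center_fraction, outer_scale):
    """Pixels shaded with a 3 x 3 viewport grid (assumed layout): the center
    viewport keeps full resolution, while the outer columns and rows are
    scaled by outer_scale along the axis they sit on."""
    center_w = width * center_fraction
    center_h = height * center_fraction
    scaled_w = center_w + (width - center_w) * outer_scale
    scaled_h = center_h + (height - center_h) * outer_scale
    return scaled_w * scaled_h


# Hypothetical per-eye render target and settings, chosen for illustration.
full = 2000 * 1000
multires = multires_pixels(2000, 1000, center_fraction=0.6, outer_scale=0.5)

print(round(multires / full, 2))  # fraction of pixels still shaded
print(round(full / multires, 2))  # idealized pixel shading speed-up
```

With these made-up settings, the GPU shades roughly 64% of the original pixels, an idealized speed-up of about 1.56x that sits within the 1.3 to 2x range NVIDIA quotes.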

Viewport Multicast accelerates the geometry broadcast stage that’s used to create the various viewports. Instead of rendering the scene in multiple passes for each viewport, the engine renders the scene’s geometry and sends it out to the relevant viewports all in a single pass. This results in a far more efficient rendering process for the GPU. And given that VR is far more unforgiving when it comes to performance drops than traditional flat screen displays, every boost in performance counts.

Test Setup

These are the specifications of our graphics test bed:

Intel Core i7-3960X (3.3GHz)

ASUS P9X79 Pro (Intel X79 chipset) Motherboard

4 x 2GB DDR3-1600 G.Skill Ripjaws Memory

Seagate 7200.10 200GB SATA hard drive (OS)

Western Digital Caviar Black 7200 RPM 1TB SATA hard drive (Benchmarks + Games)

Windows 7 Ultimate SP1 64-bit

NVIDIA supplied us with version 352.90 of its GeForce drivers, so that’s what we’ll be testing the card with. Given that it’s such a powerful card that’s designed to drive 4K displays, we’ve included more demanding benchmarks like 3DMark Fire Strike Ultra and enabled ultra HD textures on Middle-earth: Shadow of Mordor. To get an idea of how demanding the latter test is, graphics cards need to have 6GB of video memory to run it properly. Furthermore, we also scaled up the resolutions on our 2,560 x 1,600-pixel Dell UltraSharp U3011 monitor to 3,840 x 2,400 pixels (150%) and 5,120 x 3,200 pixels (200%) during our benchmarks to see how capable the card is at ultra-high resolutions.
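The scaled resolutions translate into a much heavier pixel load than the percentages suggest, since the scaling applies to both axes. A quick sketch of the arithmetic, with a helper name of our own choosing:

```python
NATIVE = (2560, 1600)  # Dell UltraSharp U3011 native resolution


def scaled_resolution(native, percent):
    """Scale both axes of a resolution by the given percentage."""
    width, height = native
    return width * percent // 100, height * percent // 100


native_pixels = NATIVE[0] * NATIVE[1]
for percent in (100, 150, 200):
    width, height = scaled_resolution(NATIVE, percent)
    pixels = width * height
    print(f"{percent}%: {width} x {height} = {pixels:,} pixels "
          f"({pixels / native_pixels:.2f}x native)")
```

In other words, the 150% setting pushes 2.25 times as many pixels as the native resolution, and the 200% setting pushes four times as many.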

A card like the GeForce GTX 980 Ti deserves to be compared with the best, and we’ve decided to pit it against the likes of the GeForce GTX Titan X, MSI GeForce GTX 980 Gaming 4G and the dual-GPU AMD Radeon R9 295X2 (codenamed Vesuvius). As a side note, we’ve used MSI’s custom version of the GeForce GTX 980 instead of the reference version because we want to focus on the ultra-high resolution gaming performance of the GeForce GTX 980 Ti, and we don’t yet have these scores available for the reference GeForce GTX 980.

In addition, we’ve updated the drivers of the Titan X and MSI GeForce GTX 980 Gaming 4G to ForceWare 350.12 so that we have more up-to-date figures to compare against.

The full line-up of graphics cards and their driver versions are listed below:-

NVIDIA GeForce GTX 980 Ti 6GB GDDR5 (ForceWare 352.90)

MSI GeForce GTX 980 Gaming 4G GDDR5 (ForceWare 350.12)

NVIDIA GeForce GTX Titan X 12GB GDDR5 (ForceWare 350.12)

ASUS Radeon R9 295X2 8GB GDDR5 (AMD Catalyst 14.4)

Benchmarks

Before we dive into the results, here’s a list of the benchmarks used:-

Futuremark 3DMark 2013

Middle-earth: Shadow of Mordor

Crysis 3

3DMark 2013 was also used in our temperature, power consumption and overclock testing.

Benchmark Results

3DMark 2013

Unsurprisingly, the dual-GPU AMD Radeon R9 295X2 manages to best all the single-GPU cards here, but not by a big margin. The NVIDIA GeForce GTX 980 Ti comes in right between the MSI GeForce GTX 980 Gaming 4G and the GeForce GTX Titan X in all three benchmarks, which is exactly how NVIDIA is positioning it. It was also on average about 20% faster than the MSI GeForce GTX 980 Gaming 4G across the board. The fact that it was able to so consistently maintain this lead is quite impressive, especially in Fire Strike Ultra, which renders at an ultra-high resolution of 3,840 x 2,160 pixels. It was likely helped in this area by its 6GB of video memory and wider 384-bit memory bus width, which means that it’s actually not that far behind the GeForce GTX Titan X.

In 3DMark Fire Strike Ultra, the Titan X was just under 4% faster than the GeForce GTX 980 Ti, which reflects quite favorably on the latter card. Despite the Titan X’s 12GB of video memory, we’re not seeing a proportionate increase in ultra-high resolution performance – this is likely a case of the Titan X running into diminishing returns at some point.

Middle-earth: Shadow of Mordor

The AMD Radeon R9 295X2 is almost a non-factor here, with dismal performance on this NVIDIA-backed title. This is an NVIDIA-only shootout, and Shadow of Mordor shows just how narrow the gap is between the NVIDIA GeForce GTX 980 Ti and the Titan X. With just a few frames of difference between the two up to and including the highest tested resolution (5,120 x 3,200 pixels), the GeForce GTX 980 Ti demonstrates that it can hold its own against the reigning single-GPU champion. Clearly, you’re not going to see the benefit of all 12GB of the Titan X’s video memory even at the highest display resolutions.

The difference between the NVIDIA GeForce GTX 980 Ti and the MSI GeForce GTX 980 was much greater. At a resolution of 2,560 x 1,600 pixels, the NVIDIA GeForce GTX 980 Ti was 24% faster than the MSI card, which is quite a significant difference.

Crysis 3

The difference between the NVIDIA GeForce GTX 980 Ti and the MSI card isn’t as pronounced in Crysis 3. There was about an 11% difference between the two at a resolution of 1,920 x 1,200 pixels with anti-aliasing turned off. Nevertheless, we see a similar pattern where the GeForce GTX 980 Ti trails the Titan X by a small margin, and it again demonstrates that it has the chops to take on NVIDIA’s most powerful card. We don’t have figures for the AMD Radeon R9 295X2 for Crysis 3, so we’ve omitted it from the charts this time.

Temperature and Power Consumption

The GeForce GTX 980 Ti features the same vapor chamber and blower-style fan that we saw on the Titan X and most of NVIDIA’s recent reference designs. Both cards have a TDP of 250 watts and posted a GPU temperature of 82°C, so they clearly have very similar thermal properties. They also ran quite a bit hotter than MSI’s custom GeForce GTX 980, but that’s to be expected given that the MSI sports a custom cooler that has been specially tweaked for cooling performance.

When it came to power consumption, the GeForce GTX 980 Ti and Titan X recorded a peak power consumption of 425 and 426 watts respectively. There’s little to differentiate the two cards when it comes to temperature and power, likely because they are both based on the same GM200 GPU.

Overclocking

Unfortunately, we only have overclocking results for the Titan X at this point in time so that’s what we’ll be comparing the newcomer against. Also, since it is the card that’s closest to the NVIDIA GeForce GTX 980 Ti in NVIDIA’s single-GPU Maxwell line-up, we feel it’s quite a valid comparison.

After applying an overvolt of 24mV in EVGA PrecisionX 16, we managed to boost the base clock of the GeForce GTX 980 Ti by 227MHz to 1227MHz, which translates into a boost clock of 1303MHz. We also managed to increase the memory data rate to 7612MHz. This resulted in a 6% increase in performance in 3DMark Fire Strike, and the performance boost increased to 11% in 3DMark Fire Strike Ultra.

On the Titan X, we achieved a slightly lower overclock of 1220MHz (boost clock: 1296MHz) but a much higher memory data rate of 7802MHz. This translated to a minuscule performance improvement of under 1% in 3DMark Fire Strike, but this widened to an almost 13% improvement in Fire Strike Ultra. It appears that both cards are so powerful that they are running up against a performance plateau at the lower 1,920 x 1,080 resolution. Instead, they require more demanding 4K resolutions to see the real benefit of their overclock.
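To put the overclocks into perspective, the clock increases can be expressed as percentages and set against the performance gains we measured. This sketch assumes that the Titan X shares the GeForce GTX 980 Ti's stock figures of 1000MHz base clock and 7010MHz memory data rate, which this review does not state explicitly.

```python
def percent_gain(stock, overclocked):
    """Percentage increase of the overclocked value over the stock value."""
    return (overclocked - stock) / stock * 100


# Stock figures for the GeForce GTX 980 Ti as quoted in this review;
# assumed here to be the same for the Titan X.
STOCK_BASE_MHZ = 1000
STOCK_MEMORY_MHZ = 7010

overclocks = {
    "GeForce GTX 980 Ti": (1227, 7612),
    "GeForce GTX Titan X": (1220, 7802),
}

for card, (base_mhz, memory_mhz) in overclocks.items():
    core = percent_gain(STOCK_BASE_MHZ, base_mhz)
    memory = percent_gain(STOCK_MEMORY_MHZ, memory_mhz)
    print(f"{card}: core +{core:.1f}%, memory +{memory:.1f}%")
```

Under that assumption, both cards gained about 22% on the core clock, yet the measured improvements topped out at 11% to 13% in Fire Strike Ultra, so the scores clearly do not scale one-to-one with clock speed.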

After overclocking, the GeForce GTX Titan X was just under 5% faster than the 980 Ti. Both cards deliver more or less the same performance increase from overclocking, but the ability to push the memory clock speed higher in the Titan X might mean that it would benefit more at higher resolutions.

Giving the Titan X a run for its money

After the release of the NVIDIA GeForce GTX Titan X back in March, it seemed inevitable that any other high-performance card would be forced to be evaluated in the shadow of NVIDIA’s newly-crowned performance king. Indeed, we’ve used the Titan X as the primary yardstick to assess the performance of the NVIDIA GeForce GTX 980 Ti, but as the card shows, it’s inches from crawling out of the shadow of its bigger GM200 brother.

NVIDIA intends to position the GeForce GTX 980 Ti between the GeForce GTX 980 and Titan X, which is precisely what our performance numbers show as well. However, happily enough (or maybe not so for Titan X owners), the GeForce GTX 980 Ti seems to be located far closer to the Titan X’s end of the spectrum.

Titan X owners who bought the card for bragging rights may breathe a sigh of relief. You still own the world’s fastest single-GPU graphics card. The Titan X is still the undisputed ultra-enthusiast card for those who want the absolute best in single-GPU performance with zero compromises. However, the GeForce GTX 980 Ti may now be emerging as a viable alternative for even the most demanding gamers. Furthermore, it is significantly faster than the MSI GeForce GTX 980, which already sports factory overclocks over the reference card.

The NVIDIA GeForce GTX 980 Ti has a launch price of US$649, which places it between the GeForce GTX 980 (revised to US$499) and Titan X (US$999). For US$150 more than the GTX 980, you get a card that’s quite a bit faster and is breathing down the neck of the Titan X in terms of performance. It’s not cheap for sure, but we’d say it offers quite decent bang for your buck, especially when compared with the Titan X.

NVIDIA is betting on DirectX 12, 4K and VR to shape the future of PC gaming, and the GeForce GTX 980 Ti is just the latest gambit in its efforts to enable both gamers and developers to take advantage of new features and improved performance. By delivering a relatively more affordable card, NVIDIA is bringing the future that it has envisioned to a slightly wider audience. And in the long run, this also means that these new technologies and performance are one small step closer to the masses.