Taylor Swift and AI ‘Deep Fakes’: The Threat to Artists is Real, What’s the Solution?

Just a few days ago, disturbing Taylor Swift deep fakes flooded the social media feeds of her fans. “X” marked the spot for ground zero. Shocking, I know, because Elon Musk cares so much about “safety,” even as apologist-in-chief Linda Yaccarino tries to woo back advertisers.

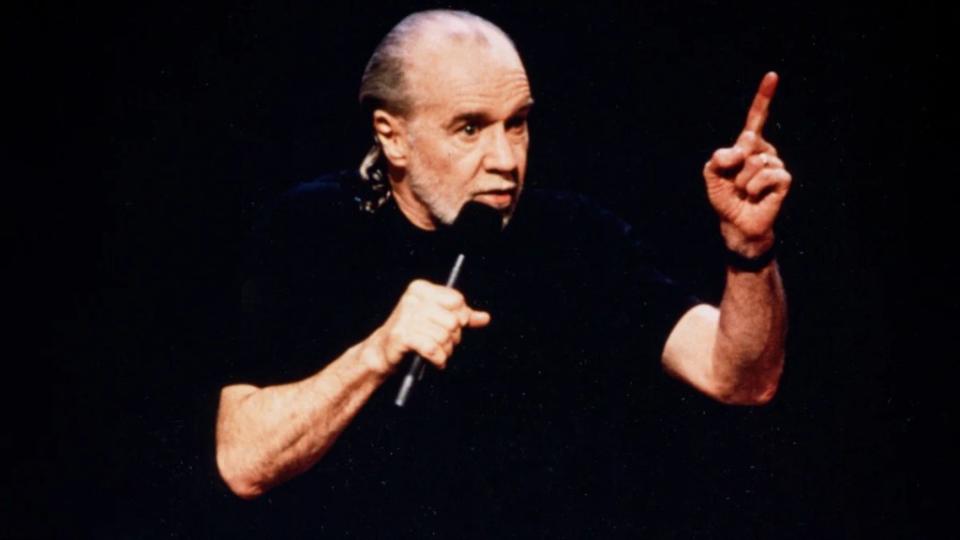

A few days earlier, before the New Hampshire primary, fake President Joe Biden robocalls flooded the phones of voters, urging them not to vote. And now, a deep fake George Carlin “performs” a new comedy special on YouTube, although no one from his estate gave any consent.

The transformational power, promise and peril of generative AI are now sinking in across a broad swath of the entertainment community. These examples are just canaries in the coal mine for the threats and harm, both commercial and reputational, that we will see in the months and years ahead.

So what can and should we all do about it now?

First, it’s critical to simply be all over it, monitoring these developments so that we understand them and take action. No matter what role we play in the creative economy, we should also experiment with generative AI to understand what it can do. To be clear, generative AI certainly will enable us to do great things, several examples of which I laid out in an earlier column.

One notable area of opportunity is licensed AI dubbing of film and television, which enables widespread localized international distribution, no matter what the original language is. Los Angeles-based Flawless AI is a leader here. Its tech will seamlessly enable actors to speak in multiple languages while their mouths perfectly match their multilingual words. That means no subtitles are needed, which in turn maximizes distribution, audience receptivity, and monetization.

But on the flip side, we also need to take action to mitigate the peril of generative AI gone wild. The examples above reflect outright theft and abuse of the names, images, likenesses and voices of famous individuals that result in both significant commercial and reputational harm. In the case of President Biden, the harm goes even further – it challenges democracy itself.

WIRED reported that the identity hacker behind those Biden fakes likely used AI tools developed by Silicon Valley-based “startup” ElevenLabs, which just recently scored $80 million in new financing at a $1.1 billion valuation in order to add Hollywood AI dubbing to its pursuits. After this report was made public, the company suspended the relevant user’s account. It also says all the right things about such abuse in its FAQs and terms and conditions. That’s where it notifies users that consent is required for “voice cloning” and indicates it will take down content that crosses that line once it is notified about it.

The Silicon Valley playbook

That all sounds great, of course. But this entire episode arguably follows the typical Silicon Valley playbook. Blue chip VC-backed tech startups create new enabling tech that requires compelling content from their users to become valuable. Then, their lawyers draft the right-sounding policies that instruct those users to follow basic rules of copyright and privacy. But those policies are frequently buried in boilerplate and used as a defense when users break them, which the companies know is happening on their platforms.

And why not? It’s in the interests of these tech companies not to add friction to the growth of their user base. That makes them more valuable. It’s better to ask for forgiveness than permission, right? In ElevenLabs’ case, I found a lot of discussion of the ease and quality of its “voice cloning” tech on the company’s homepage, but essentially nothing about the need for consent. I reached out to the company twice to correct any misunderstandings I may have had and to give it a chance to respond, but I received no reply.

We saw an analogous episode nearly two decades ago when YouTube first launched and users happily uploaded copyrighted videos by the millions. SNL’s “Lazy Sunday” rap spoof video became the poster child for this new kind of abuse, and Viacom (now Paramount) sued. Google ultimately swooped in to save the day for YouTube, buying the company and settling the litigation. Now Google’s valuation sits at $1.75 trillion, while SNL owner Comcast NBCUniversal’s sits at about one-tenth of that, $187 billion, and that number includes Comcast’s lucrative broadband business.

Perhaps some of ElevenLabs’ fresh $80 million bounty should be invested in so-called “trust and safety” initiatives to minimize the risk of user abuse. It’s all a question of will, of course. I’m confident that brilliant tech engineers can find ways to prevent abuse if resources are allocated to that goal. As my favorite media-tech pundit Scott Galloway would say, “it’s not about the realm of what is possible. It’s about the realm of what is profitable.” Money talks and money reflects priorities. Growth for growth’s sake may be great for venture capitalists, but it certainly isn’t always great for the artists and creators on whose backs multi-trillion-dollar Big Tech companies are largely built.

AI “forensic” technology already exists to combat AI deepfake abuse. One tantalizing recent example is Nightshade, which reportedly uses AI to combat deepfake AI. Another company that says it is focused on combating such abuse is Wolfsbane AI. Meanwhile, blockchain tech is apparently capable of “creating an immutable audit chain to see if consent was given.” That quote comes directly from noted venture capitalist Chris Dixon of Andreessen Horowitz, which just happens to be a major investor in ElevenLabs.

Given these latest disturbing fakes, which are certainly neither isolated nor the last, does anyone really think we have sufficient guardrails out there to protect individuals and their livelihoods, including artists in the creative community?

What we need here, in addition to self-control on the part of AI developers and the use of AI tech to fight AI abuse, is a direct dialog with the Hollywood and creative community. Only then will mutually beneficial basic rules of the game and proper economics be established. Flawless understands this, precisely because its top execs come from Hollywood and the creative community themselves. Silicon Valley entrepreneurs and VCs, take note.

We also need stiffer criminal penalties and greater visibility and advocacy on the issues of non-consensual name, image, likeness and voice abuse. While California has statutes on the books to address those NIL issues, most states don’t. Congress is looking at national legislation right now to fix this patchwork, while SAG-AFTRA and the Human Artistry Campaign are shining their spotlights on the issue.

All of us – the creative and tech communities together – must solve these issues one way or another right now before generative AI generates rewards only for itself — and at the expense, literally, of the creative community.

Reach out to Peter at peter@creativemedia.biz. For those of you interested in learning more, sign up for his “Fearless Media” newsletter, visit his firm Creative Media at creativemedia.biz, and follow him on Threads @pcsathy.

The post Taylor Swift and AI ‘Deep Fakes’: The Threat to Artists is Real, What’s the Solution? appeared first on TheWrap.